As the IT threat landscape changes, organizations must adapt to defend against ransomware and cybercrime. Though some ways of defending against these threats have changed, backup strategies have largely remained the same. The 3-2-1 backup rule is a prime example. While the rule offers trusted guidelines for the number and distribution of backup copies a company keeps, it doesn’t cover other techniques that enhance the security and safety of organizational backups. In an era of evolving threats, it’s worthwhile to re-examine the 3-2-1 rule and look for ways to protect backups more securely.

What is the 3-2-1 backup strategy?

System administrators have long observed the 3-2-1 backup rule for data protection. I’ve seen Quest customers adopt many variants of the rule, which is typically defined as:

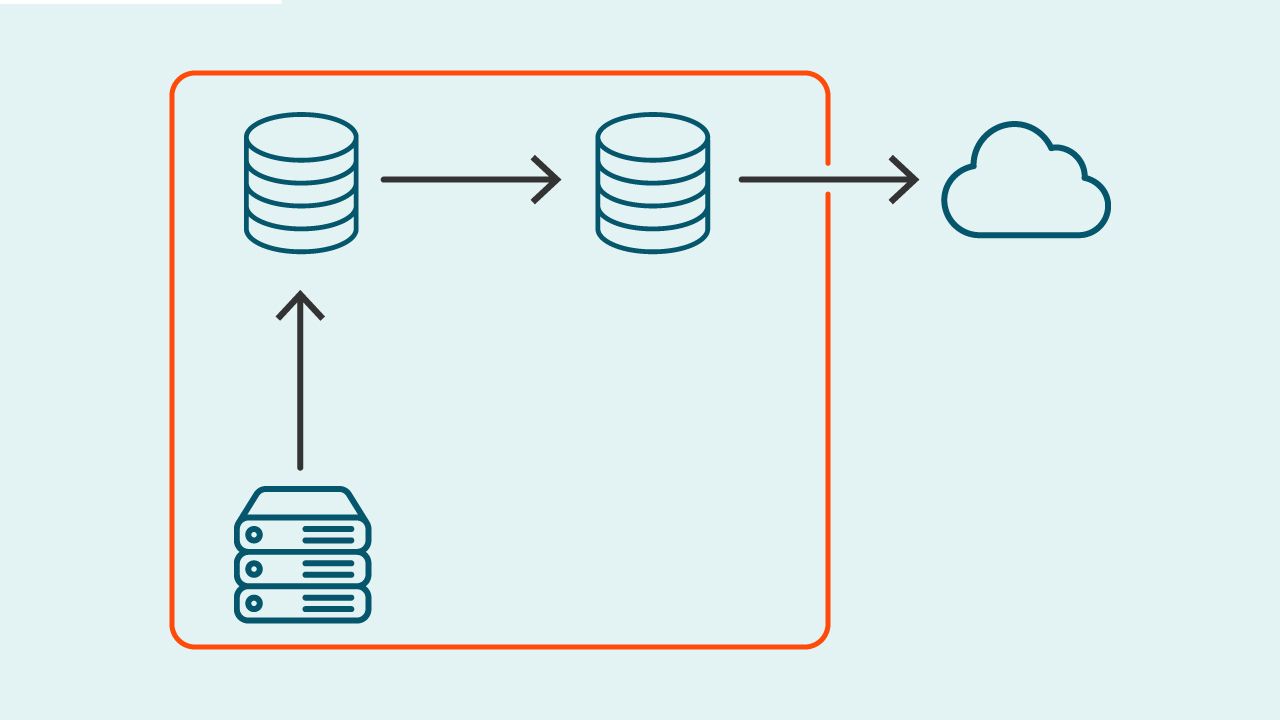

- 3 copies of all protected data

- stored in 2 different locations

- one of which is an off-site location

Those are solid, minimal goals for a data protection strategy. But most companies would be well advised to look at the 3-2-1 rule as the bare minimum. Why? Because additional enhancements to the 3-2-1 rule can give you better protection against ransomware, help lower your storage and bandwidth costs and make your business more cyber-resilient should an outage or disaster strike.

What challenges do organizations have in setting up a 3-2-1 backup? What mistakes do they make?

The 3-2-1 backup rule is the bare minimum of an effective data backup strategy and a good starting point. But despite the best intentions, some companies encounter challenges implementing even the 3-2-1 rule.

Breaking the 3: Not enough copies

A common mistake is to ignore the importance of having 3 copies of protected data. On occasion, some admins balk at the idea of going to the cloud or investing in a tape library for that third copy.

“That’s overkill,” some admins tell me. “I’ve already got two copies. Why do I need a third?” But when I dig a little deeper, I usually discover that it’s a matter of cost. They’re backing up to disks that they own – maybe even disks that have been repurposed from something else – instead of spending budget to have a secondary location.

Breaking the 2: No second location

A common scenario is that organizations will back up their data – that’s wise – then store the backup on a server in the same data center – that’s unwise. They’ve broken the 3-2-1 rule already by ignoring the importance of storing data at two locations, one of them off-site.

Maybe they’re too small to have a second data center of their own, or they don’t have the staff to select, set up and maintain that second location. However, even the smallest organizations are not too small to be the target of a ransomware attack or a natural disaster.

Breaking the 1: No offsite storage

The most worrisome situation I see is when I urge a company to store a copy off site – the 1-rule – and the admins say, “We’re not allowed to do that.” Their business operates under regulations and standards requiring that the data be protected and not stored off site. But such requirements are often misunderstood to mean that the company can’t store any of its data in the cloud.

Perhaps they’re conflating that with a regulation against storing data outside of their home country; such regulations are common. But most people who believe they can’t store backups in the cloud are misinterpreting what’s required of them or the limitations on where they can store their data.

Why is that the most worrisome circumstance? Because they’re either misinterpreting the regulation internally or getting bad advice from someone externally. In either event, the effect is the same: they’re exposed.

Moving beyond 3-2-1 backups: 4 guidelines

If you want to move beyond the 3-2-1 backup rule, here are four guidelines for even more rigorous data protection.

1. Deduplication

Use deduplication (“dedupe”) on your data as you back it up.

In data deduplication, software algorithms find blocks of duplicated data, such as in a data backup set, then replace them with pointers to already-stored copies. In a backup, that means examining data in files as you back them up, then storing only the blocks that you have modified since the last time you backed up.
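To make the idea concrete, here is a minimal Python sketch of block-level deduplication. It is an illustration under stated assumptions, not how any particular backup product works: the fixed 4 KB block size, the in-memory `store` dictionary and the `dedupe` and `restore` function names are all hypothetical, and commercial products typically use more sophisticated, variable-size chunking.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed fixed block size, chosen only for illustration


def dedupe(data: bytes, store: dict) -> list:
    """Split data into blocks, keep each unique block once, return the pointer list."""
    pointers = []
    for offset in range(0, len(data), BLOCK_SIZE):
        block = data[offset:offset + BLOCK_SIZE]
        digest = hashlib.sha256(block).digest()
        store.setdefault(digest, block)  # only a block not seen before consumes space
        pointers.append(digest)          # a repeated block costs just a pointer
    return pointers


def restore(pointers: list, store: dict) -> bytes:
    """Rebuild the original data by following the pointers."""
    return b"".join(store[p] for p in pointers)


store = {}
monday = b"A" * 8192 + b"B" * 4096    # three blocks, two of them identical
tuesday = b"A" * 8192 + b"C" * 4096   # only the last block changed overnight
monday_ptrs = dedupe(monday, store)
tuesday_ptrs = dedupe(tuesday, store)
```

Across the two backups, six blocks were processed but only three unique blocks ended up in `store`, and both days can still be restored in full from their pointer lists.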

Deduplication offers several advantages – some affect your network; others affect your budget.

- Storage space – If you back up and store a 100 GB data set every day for 12 days, you’ll accumulate 1.2 TB of data. But how many files in that data set never change from day 1 to day 12? Most of them, probably. Yet you’re filling up storage with 12 copies of the same files, which is a waste of space and money.

- Network – Sending blocks of redundant data from your network devices to backup servers, and then to storage, saturates your network paths. You’re adding serious traffic to your network while not protecting your data any more effectively.

- Devices – That congestion applies to all the devices along the backup path – switches, routers, network appliances – whether the files stay there or are just passing through. That’s like throwing away good processor cycles and memory on data you don’t need.

- Time – When you’re accustomed to having your applications and data available 24/7, you don’t look forward to a performance hit from backing up. That’s why you plan on a backup window – usually in the middle of the night – when the backup operation won’t slow anybody down. But that window ends eventually, and you don’t want to squander it on redundant data.

If your backup target supports deduplication, you can lower your overall storage costs. If it also performs deduplication before sending the data across the network, you can reduce network congestion. Those advantages are especially important when you save your backup data to cloud storage.
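The storage arithmetic from the 100 GB example can be worked through in a few lines of Python. The 2 percent daily change rate is purely an assumption for illustration; your own rate will differ, and the function names are hypothetical.

```python
def full_copy_storage_gb(dataset_gb, days):
    """Space used when every daily backup stores the whole data set again."""
    return dataset_gb * days


def deduped_storage_gb(dataset_gb, days, daily_change_rate):
    """One full copy, plus only the changed portion for each following day."""
    return dataset_gb + dataset_gb * daily_change_rate * (days - 1)


without_dedupe = full_copy_storage_gb(100, 12)       # 1200 GB, i.e. 1.2 TB
with_dedupe = deduped_storage_gb(100, 12, 0.02)      # about 122 GB at an assumed 2% daily change
```

Under that assumption, the same 12 days of protection take roughly a tenth of the space, and the same reduction applies to whatever crosses the network when deduplication happens before the data is sent.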

2. Encryption

All backup data should be encrypted.

That extends to backup data stored offsite, which can and does get breached. Encrypting the data you store offsite will reduce the chance that a breach will compromise company data.

In the past, there may have been reasons not to apply encryption to backup data sets, like the extra time or CPU cycles it takes to apply it. But over time, encryption has become so well integrated that there is no excuse for not encrypting all of your backup data.

This rule also underscores the importance of encryption key management because your encryption is only useful to the extent that your encryption keys are securely managed. Without a highly regarded method for managing encryption keys, the keys are at risk of ending up in the wrong hands. Such a method includes strong encryption ciphers and encryption key rotation, which respected commercial products offer.

Resist the temptation to regard keys as just another password, because they aren’t. If keys are never changed, rotated or managed with a proper vault, then anyone with access to them can decrypt any data on which they were used.
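One small piece of key management, checking that keys are actually being rotated, can be sketched as follows. The 90-day limit, the key names and the `keys_due_for_rotation` function are all hypothetical; a real deployment would read key metadata from its vault or key management system rather than from a dictionary.

```python
from datetime import datetime, timedelta

MAX_KEY_AGE = timedelta(days=90)  # assumed rotation policy, for illustration only


def keys_due_for_rotation(key_created_at, now, max_age=MAX_KEY_AGE):
    """Return the IDs of keys that are older than the rotation policy allows."""
    return sorted(
        key_id
        for key_id, created in key_created_at.items()
        if now - created > max_age
    )


# Hypothetical key inventory: key ID mapped to its creation date
inventory = {
    "backup-key-2023": datetime(2023, 1, 1),
    "backup-key-2024q2": datetime(2024, 4, 15),
}
overdue = keys_due_for_rotation(inventory, now=datetime(2024, 6, 1))
```

Here only `backup-key-2023` is flagged. A check like this does not replace a vault; it simply makes a never-rotated key visible before an attacker finds it.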

3. Immutability

Store your backups with immutability to help protect against ransomware.

Most ransomware attacks follow a pattern of first finding and deleting the copies you make of your backup data sets. Why? To ensure that once your organization is infected, restoring from backup is not an option. That forces you to pay the ransom. Storing data with immutability ensures that your backups can’t be deleted or modified by anybody – not by you, not by your CIO, not by the manufacturer of your backup system.

Immutability is a feature of your backup software. Once it writes your data, it configures a setting that effectively changes the status of the data to read-only. That means that the data cannot be modified or deleted. And, in combination with the previous rule about encryption, you can ensure that, even if attackers manage to exfiltrate or read your data, all they’ll see is gibberish.

The best use of immutability is to apply it to all copies of backed-up data in all locations. If you decide to implement it – and you should – keep in mind that it will introduce a new variable to your data protection strategy. That’s because it requires that you plan for sufficient storage capacity to hold your immutable backups for as long as you configure them to remain immutable. (Remember: Nobody – not even you – can delete them before then. If that were possible, they wouldn’t be immutable.) If you find that storing immutable backups will require more space than you have, you’re better off increasing space than shortening your period of retention.
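The capacity planning this implies can be roughed out in Python. This is a simplified sketch: it assumes each daily backup adds a fixed amount of data after deduplication, and the function names and figures are hypothetical rather than taken from any product.

```python
from datetime import datetime, timedelta


def can_delete(written_at, retention, now):
    """An immutable backup may be removed only once its retention period has elapsed."""
    return now >= written_at + retention


def locked_capacity_gb(daily_backup_gb, retention_days):
    """Steady-state space held by backups that nobody can delete yet."""
    return daily_backup_gb * retention_days


retention = timedelta(days=30)
written = datetime(2024, 1, 1)
still_locked = not can_delete(written, retention, now=datetime(2024, 1, 15))
needed_gb = locked_capacity_gb(daily_backup_gb=20, retention_days=30)  # 600 GB
```

With an assumed 20 GB of new backup data per day and a 30-day lock, you need room for 600 GB that cannot be reclaimed early. Doubling the retention period doubles that figure, which is why capacity belongs in the plan from the start.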

4. Service-level agreements (SLA)

Test your restore procedures to ensure you can meet your SLAs.

Suppose you tell your executive staff, “In the event of an outage or disaster, we can recover from backups and have everyone back to work within 12 hours.” That recovery time objective (RTO) becomes your SLA. People are going to depend on it, so before you make a promise like that, you’d better be sure you can make good on it.

That’s where testing comes in. I’ve seen too many disaster recovery plans fall flat because they were never tested until the bleak day when they were pressed into service. And that’s precisely the day on which you don’t want to find out that it takes, say, 36 hours to return to productivity.
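A restore drill can be as simple as timing the restore and comparing the result with the RTO you promised. The sketch below assumes your restore procedure can be wrapped in a Python callable; `run_restore_drill` and `meets_rto` are hypothetical names, and the placeholder restore function does nothing.

```python
import time
from datetime import timedelta


def meets_rto(elapsed, rto):
    """True when the measured restore time fits within the promised RTO."""
    return elapsed <= rto


def run_restore_drill(restore_fn, rto):
    """Time a restore procedure and report whether it met the RTO."""
    start = time.monotonic()
    restore_fn()  # your real restore steps would run here
    elapsed = timedelta(seconds=time.monotonic() - start)
    return elapsed, meets_rto(elapsed, rto)


rto = timedelta(hours=12)
elapsed, ok = run_restore_drill(lambda: None, rto)      # placeholder restore
bleak_day = meets_rto(timedelta(hours=36), rto)          # the 36-hour surprise: False
```

Running a drill like this on a schedule, against real data and real hardware, is what turns the 12-hour figure from a hope into a measurement.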


Your SLA is related to your recovery time objective (RTO) and your recovery point objective (RPO), the point in time back to which you want to be able to recover your data. SLAs lead many companies to implement more-frequent backups and continuous data protection (CDP). The more frequently you back up, the less changed data there is to back up in each pass.

When you back up changed data once a day, a lot of changes can accumulate between passes. But with modern backup tools and CDP, you could back up every four hours, every two hours or even every hour, accumulating far fewer changes for each pass. As a result, you have a better chance of meeting your RPO. You also have shorter backup windows and less data being backed up in each pass. That helps you observe your SLA.
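The relationship between backup frequency and RPO comes down to simple arithmetic: in the worst case, you lose everything written since the last backup, so the backup interval must not exceed the RPO. The sketch below assumes a constant change rate, and the 2 GB per hour figure and 4-hour RPO are made up for illustration.

```python
def meets_rpo(backup_interval_hours, rpo_hours):
    """Worst-case data loss equals the backup interval, so it must fit within the RPO."""
    return backup_interval_hours <= rpo_hours


def changed_gb_per_pass(change_rate_gb_per_hour, backup_interval_hours):
    """Approximate data to move in each pass, assuming a steady change rate."""
    return change_rate_gb_per_hour * backup_interval_hours


RPO_HOURS = 4  # assumed objective
for interval in (24, 4, 1):
    print(interval, meets_rpo(interval, RPO_HOURS), changed_gb_per_pass(2, interval))
```

A daily backup misses the assumed 4-hour RPO and has 48 GB to move in one pass; an hourly backup meets it with only 2 GB per pass.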

Conclusion

Backing up data once a day is just asking for trouble. Data changes too frequently. But old habits die hard, so it’s important for backup admins to understand what’s important in the data they’re backing up. Not all data needs to be backed up every hour. But the files and databases that your business would need to recover most urgently in a disaster need a higher level of protection.

The 3-2-1 backup rule is a time-honored way of answering the questions “How many?” and “Where?” These four guidelines around the 3-2-1 backup rule represent a fresh look that answers the questions of “How can we efficiently back up our data?” and “How can we better protect our backups?” in a changing threat landscape.