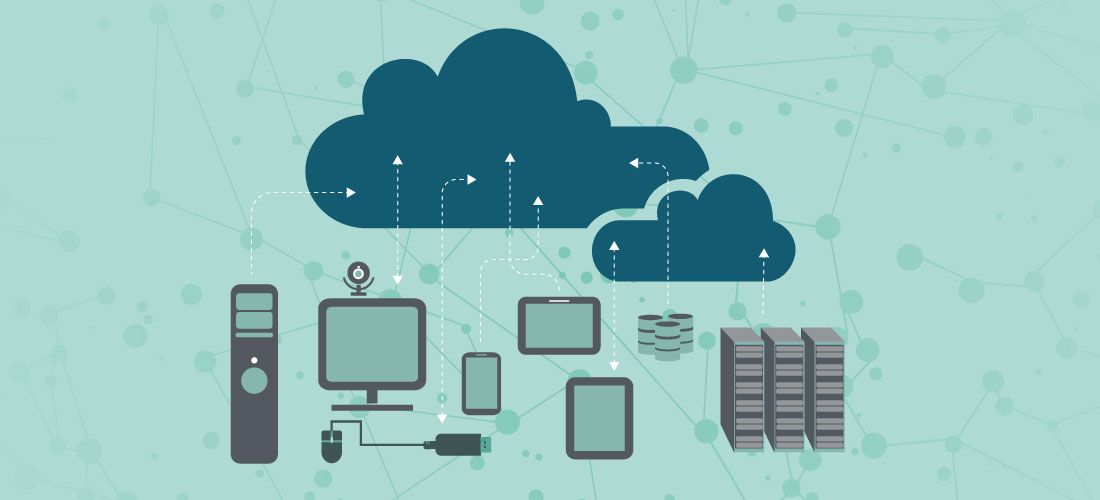

Organizations are under increasing pressure to back up and recover data quickly, accurately and within budget, while data growth in the cloud makes backup and recovery ever more complex.

Backing up all your data is a challenge because, in essence, you’re at the mercy of the growing amount of data you must retain and manage. This, in turn, impacts:

- How much storage you consume

- The associated rising storage costs

- Increasing backup and recovery windows

- And ultimately, how prepared you are to recover from a cyberattack or disruption event

The result for many organizations is slower, riskier backup and recovery, higher cloud storage costs, and a lack of true disaster recovery readiness.

So, how can organizations address the natural impacts of data growth while meeting the backup and recovery demands of the organization?

In this blog, we will discuss how data optimization can solve many of these challenges, focusing on three specific optimization techniques and when it’s best to use them.

Three key data optimization strategies

When it comes to optimizing backups, there are three key strategies that will help you get the most out of your data backups:

- Data deduplication

- Data compression

- Storage tiering

Let’s dive deeper into what each approach entails and when to apply them in your backup process.

Data deduplication

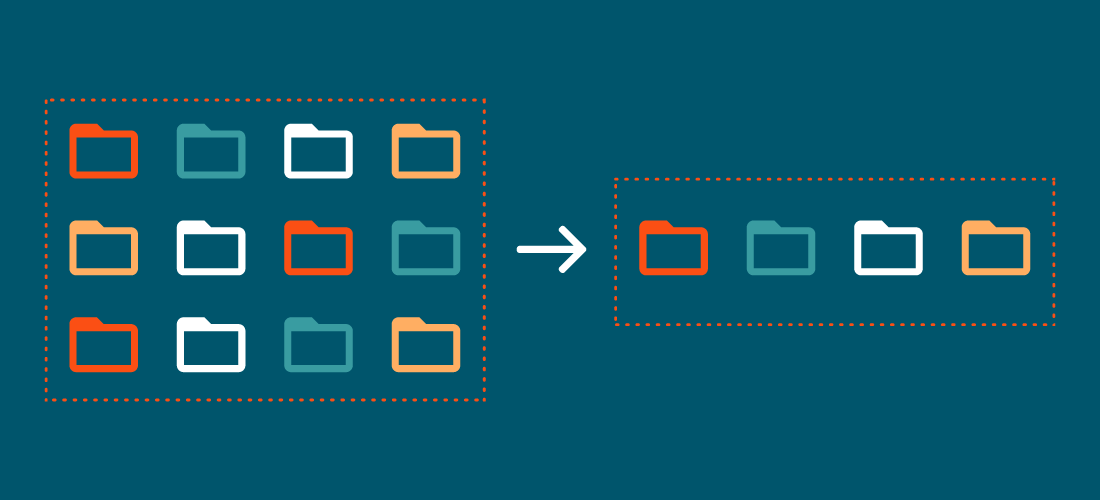

The process of data deduplication is based on finding and eliminating blocks of duplicated data. It involves examining data within files and storing only the blocks that are not already stored, such as those that have changed since the previous backup operation. Deduplication uses an index to track the unique blocks or files it has already stored.

When a duplicate block or file is found, it is replaced by a reference, either the hash (for blocks) or a file stub (for files), to reduce the overall size of the backup data being stored. Depending on how it’s implemented, deduplication can achieve space savings of 90% or more.

Block-level deduplication assumes a consistent block size (e.g., 4K, 8K, 16K, 32K). Keep in mind that the type of backup data being deduplicated may be better suited for a larger or smaller block size in order to find more matches and achieve the greatest size reduction. There’s also variable-block deduplication, which identifies patterns in the data, adjusts the block size used, and can even align block start and end points to get even more space savings.
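To make the fixed-block approach concrete, here is a minimal Python sketch. It is an illustration only, not how any particular backup product works: the function names `dedupe_blocks` and `restore`, the 4K block size, and the choice of SHA-256 as the block hash are all assumptions made for this example.

```python
import hashlib


def dedupe_blocks(data: bytes, block_size: int = 4096):
    """Split data into fixed-size blocks and keep one copy of each unique block."""
    index = {}   # block hash -> block bytes (the unique-block store)
    recipe = []  # ordered list of hashes needed to rebuild the original data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        if digest not in index:
            index[digest] = block  # first time this content is seen
        recipe.append(digest)      # duplicates only cost a hash reference
    return index, recipe


def restore(index, recipe) -> bytes:
    """Rebuild the original data from the block store and the recipe."""
    return b"".join(index[digest] for digest in recipe)


# Illustrative input: 100 blocks, but only two distinct block contents.
sample = (b"A" * 4096 + b"B" * 4096) * 50
index, recipe = dedupe_blocks(sample)
stored = sum(len(block) for block in index.values())
savings = 1 - stored / len(sample)
print(f"unique blocks: {len(index)}, space savings: {savings:.0%}")
```

Real backup data is far less repetitive than this sample, so the savings shown here say nothing about what a given data set will achieve. Trying different `block_size` values on representative data is one way to see how block size affects the number of matches.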

Data deduplication can be processor-intensive, so there are some considerations to weigh in your approach:

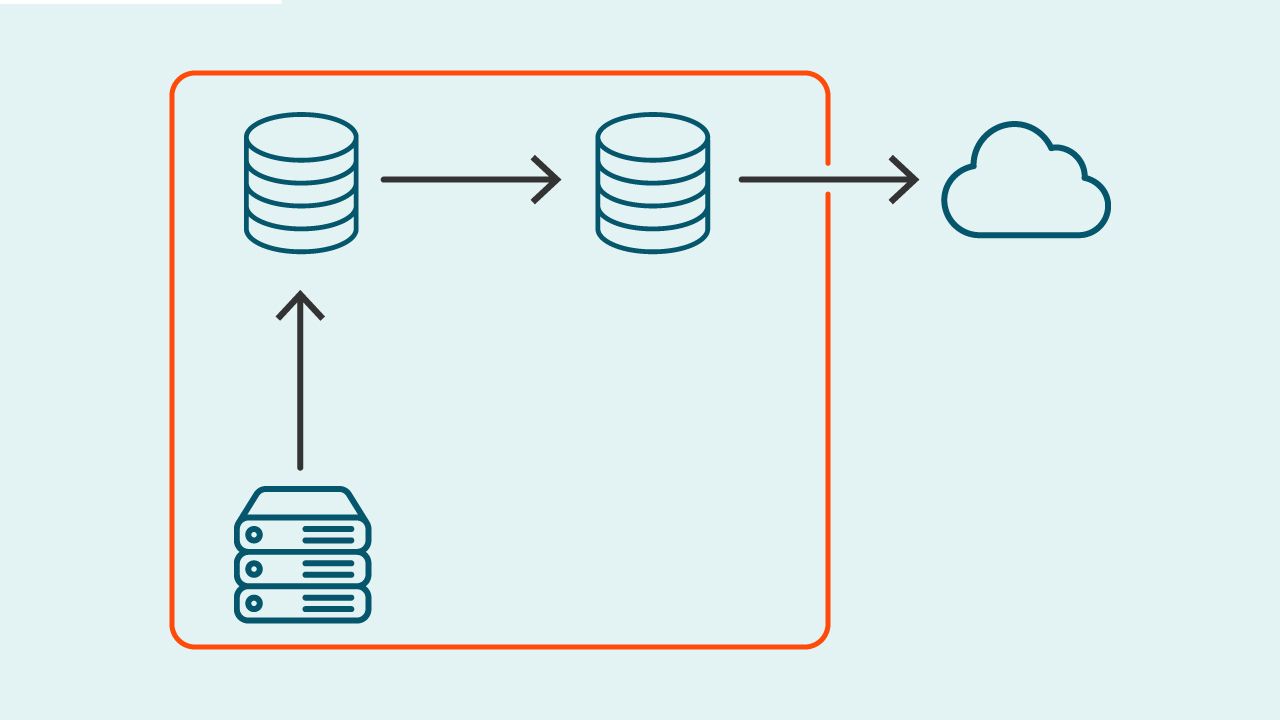

- Source vs. destination – Does the deduplication occur before it’s backed up to the cloud (source) or once it’s in the cloud (destination)?

- Job level vs. global – Is deduplication being done only for the data within a single backup job (e.g., a few VMs) or across all of your backup jobs (e.g., your entire enterprise)?

- Deduping SaaS data – Assuming your backup strategy includes your Microsoft 365 and Salesforce instances (and other key cloud applications), you should be deduplicating that data as well.

- Software-defined vs. appliance-based – While deduplication does exist in most backup solutions, many organizations want a solution dedicated to deduplication. Some utilize physical appliances, which then means you need to worry about hardware issues, refreshes, etc.
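The difference between job-level and global deduplication can be sketched in a few lines of Python. Everything here is hypothetical: the helper names, the 4K block size, and the toy "VM images" (a shared 8-block base image plus one unique block per VM) are assumptions chosen only to show the effect of the index scope.

```python
import hashlib

BLOCK = 4096  # assumed fixed block size for this sketch


def block_hashes(data: bytes) -> list:
    """Return the SHA-256 hash of each fixed-size block in data."""
    return [hashlib.sha256(data[i:i + BLOCK]).hexdigest()
            for i in range(0, len(data), BLOCK)]


def stored_blocks_job_level(jobs) -> int:
    """Each job keeps its own index, so shared blocks are stored once per job."""
    total = 0
    for job in jobs:
        seen = set()
        for image in job:
            seen.update(block_hashes(image))
        total += len(seen)
    return total


def stored_blocks_global(jobs) -> int:
    """One index spans all jobs, so shared blocks are stored only once."""
    seen = set()
    for job in jobs:
        for image in job:
            seen.update(block_hashes(image))
    return len(seen)


# Toy data: every VM shares an 8-block base image and adds 1 unique block.
base = b"".join(bytes([n]) * BLOCK for n in range(8))


def vm(n: int) -> bytes:
    return base + bytes([100 + n]) * BLOCK


jobs = [[vm(0), vm(1)], [vm(2), vm(3)]]  # two backup jobs, two VMs each
job_level = stored_blocks_job_level(jobs)
global_level = stored_blocks_global(jobs)
print(f"job-level: {job_level} blocks stored, global: {global_level} blocks stored")
```

In this toy case the base image is stored once per job under job-level deduplication but only once overall under global deduplication, which is why the scope of the index matters as much as the deduplication method itself.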

Data compression

Data compression means compressing the data to be backed up before sending it to the cloud. This reduces the amount of data moving between workloads and cloud backups. Because compression is processor-intensive, it adds latency, so you need to balance the desired compression ratio against the latency it creates during the backup and restore processes. A good compression ratio would be 1:10 or better (compressed size versus original data size). While data types compress at different ratios, 1:12 would be considered an excellent compression ratio.

The very nature of compression being processor-intensive should raise the question of whether you should compress at all. The short answer is yes, with the caveat that you want the benefit of compression as long as it isn’t slowing down your backup and recovery.
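One way to check whether compression is worth its processing cost is to measure both the ratio and the time on a sample of your own data. The sketch below uses Python's standard `zlib` module as a stand-in for whatever compression your backup solution applies; the `measure` helper and the repetitive sample data are assumptions for illustration, and the timings will vary by machine.

```python
import time
import zlib


def measure(data: bytes, level: int):
    """Compress data at the given zlib level; return (compressed, ratio, seconds)."""
    start = time.perf_counter()
    compressed = zlib.compress(data, level)
    elapsed = time.perf_counter() - start
    ratio = len(compressed) / len(data)  # compressed size versus original size
    return compressed, ratio, elapsed


# Illustrative, highly repetitive data; real backups compress far less predictably.
sample = b"backup record 0001: status=ok; " * 20000

# Level 1 is fastest, level 9 compresses hardest, level 6 is zlib's usual default.
results = {level: measure(sample, level) for level in (1, 6, 9)}
for level, (_, ratio, elapsed) in results.items():
    print(f"level {level}: ratio {ratio:.4f}, {elapsed * 1000:.2f} ms")
```

If a higher level costs noticeably more time for only a small improvement in ratio on your data, the lower level is likely the better fit for your backup and recovery windows.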

Storage tiering

Data deduplication and data compression are great for both on-premises and cloud sources and targets. Cloud storage, however, has another option you can take advantage of to reduce costs: storage tiering. Cloud storage providers offer multiple tiers of storage, usually trading performance and speed of access for lower cost, with “colder” tiers being less costly per GB but much slower. When it comes to storage tiering, the best way to optimize backup costs in the cloud is with an automated, policy-based approach. This will help you move retained backups over time to colder tiers of storage, reducing costs.

There are other factors that come into play with each tier (e.g., availability, storage duration minimums, minimum storage charges per object). In the middle of a recovery, you want anything that affects performance to be measured in minutes. So, if you use the “coldest” tier of storage on AWS, for example, the first byte latency (that is, how long you should expect to wait before you receive the first byte of the backup you need to recover) is simply listed as “hours” – which means you may not see a recovery start for that long a period!

Cost versus speed: how to find the right balance

Now that we’ve gone over the backup data optimization strategies at your disposal, let’s get into next steps and key considerations for using each one. With the right approach, you can quickly recover data – while keeping backup data storage costs low.

Implementing tiered storage

You’ll want to align your data retention requirements for your backups with the most cost-effective storage tiers. Find out how many tiers your cloud storage provider offers, what the cost differences are, and what the first byte latency is. Then, map this to the workloads and operational data your backup data sets represent.
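The mapping exercise can be sketched as a small selection function: given the longest first byte latency a workload can tolerate, pick the cheapest tier that still meets it. The tier names, prices and latencies below are placeholders invented for this example, not any provider's actual figures; substitute the values your provider publishes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    cost_per_gb_month: float     # placeholder value, not real pricing
    first_byte_latency_s: float  # placeholder value, in seconds


# Hypothetical tiers for illustration only.
TIERS = [
    Tier("hot", 0.020, 0.1),
    Tier("cool", 0.010, 0.1),
    Tier("archive", 0.002, 12 * 3600),
]


def cheapest_tier(max_first_byte_s: float, tiers=TIERS) -> Optional[Tier]:
    """Return the lowest-cost tier whose first byte latency fits the limit."""
    eligible = [t for t in tiers if t.first_byte_latency_s <= max_first_byte_s]
    if not eligible:
        return None  # no tier can meet this recovery requirement
    return min(eligible, key=lambda t: t.cost_per_gb_month)


# A workload that must start restoring within a minute vs. one that can wait a day.
print(cheapest_tier(60).name)
print(cheapest_tier(24 * 3600).name)
```

A fuller model would also account for the other per-tier factors, such as storage duration minimums and minimum charges per object, which this sketch deliberately leaves out.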

To maximize the benefits of tiered storage, data should be moved between tiers based on a policy that you define. For example, six-month-old backups don’t need to stay in more costly cloud storage. Your cloud provider may offer storage management, and some enterprise backup solutions include tiered storage management capabilities as well. It’s also possible to “tier” on-premises backups and cloud backups. Your most recent backups remain on-prem for the fastest recovery (assuming we’re talking about on-prem workloads), with older backups being “tiered” (read: moved) to cloud-based storage.
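An age-based policy of this kind can be expressed as a simple lookup. The sketch below is a hypothetical illustration: the tier names and the 30-day and 180-day thresholds are assumptions (the 180-day rule mirrors the six-month example above), and in practice you would configure the equivalent rules in your cloud provider's or backup solution's own policy tooling.

```python
from datetime import date, timedelta

# Hypothetical policy: (minimum age in days, tier name), ordered oldest-first.
POLICY = [(180, "archive"), (30, "cool"), (0, "hot")]


def tier_for(backup_date: date, today: date, policy=POLICY) -> str:
    """Return the tier a backup should live in, based on its age in days."""
    age_days = (today - backup_date).days
    for min_age, tier in policy:
        if age_days >= min_age:
            return tier
    return policy[-1][1]  # fall back to the warmest tier


today = date(2024, 7, 1)
for age in (6, 61, 200):
    backup_date = today - timedelta(days=age)
    print(f"{age:>3} days old -> {tier_for(backup_date, today)}")
```

Keeping the thresholds in one data structure makes it easy to align them with your retention requirements without touching the selection logic.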

Using data deduplication

While some backup solutions may include data deduplication or offer it as an add-on, these options may come with limitations. For example, if your backup solution dedupes at the VM level, the best-case scenario is one where you built, say, all 100 of your servers from the same base image, which would create a massive opportunity to dedupe the commonality across them. But that’s not likely the case, so read the documentation to better understand at what level any built-in deduplication capabilities function – and how they are really going to benefit you.

You should take advantage of any deduplication functionality available to you, as long as it doesn’t negatively affect backup and recovery performance.

Compressing data

One thing to be aware of when using the compression functionality in your backup and recovery solution is that it can be CPU-intensive. And while built-in compression helps, it may not yield the best results. Some backup solutions can increase CPU usage by 10 times just to achieve 10 percent more compression. It’s still worth using compression, but realize that backup solution-based compression won’t deliver the full benefit of what can be accomplished today.

Using all three data optimization strategies to achieve the best results

If you’re looking to dramatically reduce storage costs and accelerate backup and recovery, implementing all three data optimization methods is your best bet. The trick is to find a strategy that seamlessly works with your existing backup solution to handle the compression, deduplication and storage management. This approach will ensure your backup and recovery efforts are as efficient and accurate as possible. Investing in a third-party strategy will prevent you from having to add overhead to your backup solution, and it will quickly pay off, as you watch your storage costs decline. The right strategy should not only help with data optimization, but it should also provide immutable backups to help protect against ransomware.

The path to data optimization

Your backup and recovery efforts are inevitably going to become more taxing as data continues to grow. Because organizations will never have an unlimited budget for cloud storage, it’s necessary for IT to invest in data optimization methods that will increase the speed of backup and recovery while reducing the cost of retaining and maintaining the resultant backup data in the cloud. By optimizing backups using data deduplication, data compression and tiered storage, you’ll ensure you’re doing everything possible to keep storage costs low and backup and recovery speed high.