Protecting business-critical data can be daunting – from the volume of data you must protect, to the threats against it. But with the right data backup methods in place, you’ll ensure disaster recovery and IT resilience. So, let’s jump into the topic of data backup, why it’s important and which methods may work best for your organization.

What is data backup?

Data backup, simply put, is creating a copy of your digitized data and other business information. This provides an insurance policy against accidental changes, malicious attacks and man-made or natural disasters. You can use the backup copy to recover or restore your data for business continuity and disaster recovery.

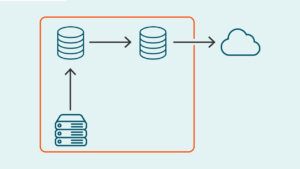

Many organizations create multiple data backups. They’ll keep one copy on premises for fast recovery and another copy offsite or in the cloud. That way, if the copy stored on premises is damaged, due to a flood, fire or other event, the second copy can be used in its place.

Why are data backup methods critical?

Failing to properly prepare for an event can cause irreversible damage, permanently stunting the growth and stability of a company. Your backups are your last line of defense and probably the only way to recover when data is changed, lost or damaged due to an unexpected event or malicious attack. Given that extreme weather events, cybercrime and other threats are all on the rise, data is more at risk than ever. That’s why it’s critical to understand the various data backup methods at your disposal.

So, let’s explore nine data backup methods every business should know.

1. Full backup

A full backup is the process of making at least one copy of all the data a business wishes to protect in a single backup operation. This method provides you with a complete copy of all your data, which simplifies version control and speeds recovery, as it’s always available.

One downside to this method is the amount of time it requires. While it’s generally considered the safest approach, as it ensures everything is backed up, it is also the slowest of the data backup methods, due to the amount of data that must be copied. Full backups also require the most storage space and network bandwidth. Technologies such as data deduplication and compression can help reduce space consumption and, in some cases, speed the full backup process. If a simple, quick recovery model is required and space considerations and backup speeds are not a problem, then recovering from a full backup will be very effective.

Keep in mind that it’s critical to ensure your full backup is encrypted; otherwise, your entire data estate is vulnerable.

2. Differential backup

A data backup that copies all files that have changed since the last full backup is known as a differential backup. Differential backups include any data that has been added or altered in any way. This approach does not copy all the data every time – only what’s different from the full backup.

Differential backups require less storage space than a full backup, which is beneficial from a cost perspective. But keep in mind that restoring differential backups is slower than restoring a full backup. They can also be more challenging to manage, as two files are required with differential backups. But using a differential backup will potentially provide a faster recovery time than incremental backups, though this will depend on what your data is stored on. Differential backups are a good way to simplify recovery with a reduced backup time window. However, each differential will grow as it gets closer to the next run time of a full backup.

Again, storage reduction technologies, like deduplication, can help as each differential will contain similar data to a previous one.

3. Incremental backup

Incremental backups always start with a full backup. Like a differential backup, an incremental backup only copies data that’s changed or been added since the prior backup was performed. But where a differential backup is based on changes to the last full backup, all incremental backups (after the initial one) are based on changes to the last incremental backup.

In general, incremental backups will require less space than both differential and full backups. To use the least amount of storage space, you can perform byte-level incremental backups, instead of block-level incremental backups.

Of all three data backup methods we’ve explored so far, incremental backups are slower to restore. They can also be more complicated to manage, as restoration requires all the files throughout the backup chain.

This method is good when you’re constrained by a tight backup window; it allows for a smaller amount of data to be captured and moved over an infrastructure to the desired target.

4. Synthetic full backups

Synthetic full backups are a great way to reduce the impact of long incremental chains without the impact of pulling all the data again as you would in a full backup. A synthetic full backup is created by using the previous full backup and the incremental backups to create a new full backup with all the incremental changes. This is a reset point for your incremental backup strategy. This method is sometimes called ‘incremental forever.’ A good solution would do the synthetic full creation at the backup storage layer. Synthetic full backups allow you to just have incremental data moving over your infrastructure without sacrificing the recovery time associated with very long incremental data chains.

5. File-level backup

While traditional file-level backup methods may have a reputation as being outdated, that is simply not the case. This data backup method can be a key component of a larger data protection and disaster recovery strategy.

With this approach, you select what you want to back up. A scan of the file system is performed and a copy of each dataset is made to a different destination. This backup is typically performed daily or on a similar schedule.

You don’t have to use only one of the data backup methods described so far. Most organizations will use some combination. That approach will help you reduce the risk of data loss. It also provides the added benefit of enabling you to achieve better recovery point objectives. This is a great way to work with larger unstructured datasets where they either reside on a physical machine or a very large virtual machine that cannot be backed up at a block level. File system backups have evolved to use heuristics and multi-streaming to deliver faster data movement for larger datasets over more capable infrastructures.

6. Image-level backup

If improving your recovery point objectives and achieving near-continuous data protection are your main goals, image-level backups are a great option. Sometimes referred to as infinite incremental backup, image-level backup performs multiple backups within a short window. This works well for data recovery, as you can get your system up quickly after a disaster, whether the OS is functioning or not. Image-level backups come in many flavors, from a volume on a physical machine to a complete image of a virtual machine. Image-level backups of virtual machines have become the most popular approach, reducing management overhead.

If you’re looking for a fast way to restore after a disaster and you have physical machines, you should consider a bare metal restore, which is another type of image-level backup. With this approach, you’re not only backing up data but also the OS, application and configuration settings, making it faster to rebuild and recover after an event. Often, bare metal recovery can be done to a virtual machine, aiding in disaster recovery where physical machine access could be very limited.

7. Continuous data protection

You’ve probably been hearing about continuous data protection (CDP), as it has grown in popularity recently. Businesses use this data backup method to speed recovery and hit more targeted recovery point objectives.

CDP captures any new or changed blocks of data at the sub-file level at very frequent intervals. You can restore a failed virtual machine or server in minutes or even seconds with some modern CDP approaches. That’s the case when restore points are created using a snapshot or volume filter device to track disk changes.

To ensure data integrity and prevent problems when performing a restore, these backups are application consistent. Validating the latest backup through an integrity check will ensure recoverability. CDP and ‘instant restore’ technologies are good for business-critical systems. This approach reduces the amount of potential data loss and it can speed recovery, reducing the cost impact of downtime for a business.

8. Array-based snapshots

Array-based snapshots are low impact and require less storage space than other data backup methods. You can recover volumes of data at a granular level quickly with this approach. After a base snapshot is taken of any data written to a volume, only incremental changes are captured in the following snapshots. This saves disk space and speeds local recovery. You can create many snapshots without setting aside extra disk space. The snapshots can all be scheduled at short intervals to meet your recovery point objectives.

Because they operate outside normal backup operations, snapshots can add complexity. Backup applications that complement array-based snapshots can help you overcome common challenges. Being able to generate, schedule and recover snapshots through the same UI makes this backup method easier to manage.

While storage snapshots are a good component of an overall recovery strategy, they still reside on primary storage and would be lost in a disaster. Either making copies of the snapshots or backing up the data to an alternative destination are still best practices.

Protect all your systems, applications and data.

9. Data deduplication

If data growth is taking a toll on your backup storage budget, data deduplication can help. Data deduplication finds and eliminates blocks of duplicated data and stores only the blocks that have changed since the prior backup. This dramatically reduces backup data storage requirements and costs. Deduplication solutions can also offer ‘offloading’ options providing source-side deduplication. This further reduces backup data traffic over an infrastructure and can reduce the backup time.

Adding a software-defined approach to data deduplication can complement your existing backup system. This will give you more flexibility for managing resources, automating provisioning and quickly allocating on-premises or cloud-based storage capacity. A software-defined approach can better meet new demands than hardware appliances and support a wider range of cloud providers, storage devices and virtualization platforms.

As data growth continues with no sign of slowing, data deduplication can help you keep backup storage costs under control.

Conclusion

Cyberattacks, natural disasters and even mundane hazards like human error put data at risk every day. It’s no longer a question of if an event will occur but when. Implementing the right backup and recovery methods at the right time will help protect your organization against growing threats that could cause irreversible damage. Immutable backups will further protect data against threats like ransomware. Ultimately, your backups are your last line of defense. Creating a data backup strategy built on the methods we’ve just explored is your best bet for keeping your data and your business safe.