Performance tuning a database is mostly a science, but with cloud vs. on-premises databases it’s more like an art. You have to take into account the type of organization, the individuals performing the tuning and the systems involved.

You also need to look at performance metrics. The metrics associated with cloud vs. on-premises databases take you even deeper. They depend on roles, activities and perspectives. Performance tuning has the same goal for cloud and on-premises databases, but there are big differences in the abstraction layers and services that segregate the platform offerings, and in the roles that define each performance model.

In this article, we’ll examine ten best practices to guide you in developing your own performance models for cloud and on-premises databases and evaluate how they impact performance.

Measuring performance in the cloud vs. on-premises

For on-premises systems, performance is a measure of all the physical components (hardware) and logical components (software stack) combined. In the distributed architecture that is typical on-premises, multiple servers and applications can affect performance. That’s why integration and implementation details are important for measuring performance effectively.

The impact of performance roles

Of course, the reason you need performance tuning is that you’re having performance issues: users are complaining, pages load slowly and reports take forever to run. On-premises, the problem usually looks different to almost everybody affected by poor performance: system administrators, database administrators, VM administrators, application developers, web administrators, cloud engineers, cloud administrators and so on. Naturally, your colleagues in each role tend to explore performance metrics only within their specialized area and scope of responsibility. Sometimes, though, determining root cause requires a collective effort.

But, when it comes to the performance roles in the cloud vs. on-premises, cloud providers usually employ performance engineers who work across teams and perform a use-case study of the environment. Besides identifying opportunities to use specific metrics for system-wide analysis, they have their eye on a better toolset for performance monitoring and they can plan capacity across the environment.

What is a performance model?

When you create a performance model, you define significant aspects of the way your system operates. That extends to the resources the system consumes and the metrics you’ll use to measure performance.

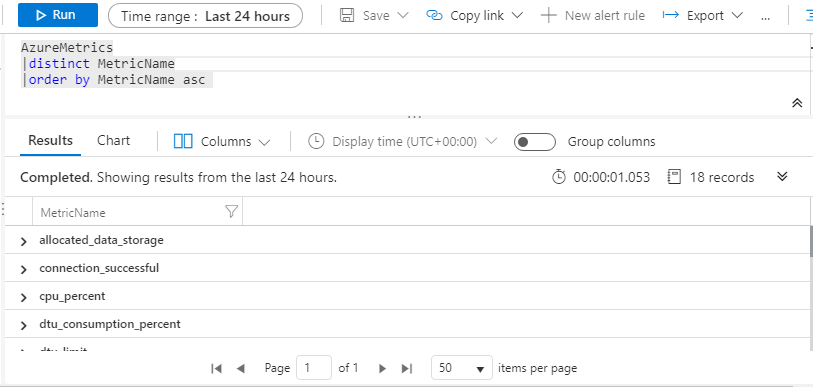

Consider a cloud database, for example, in Azure SQL, with the metrics bound to the cloud-managed services and resources. You can query the metrics using a Log Analytics query called Kusto Query Language:

AzureMetrics|distinct MetricName|order by MetricName asc

The results are shown in this image:

In fact, the results of the query include the following metrics, which can go into your performance model:

MetricName

- allocated_data_storage

- connection_successful

- cpu_percent

- dtu_consumption_percent

- dtu_limit

- dtu_used

- log_write_percent

- physical_data_read_percent

- sessions_percent

- sqlserver_process_core_percent

- sqlserver_process_memory_percent

- storage

- storage_percent

- tempdb_data_size

- tempdb_log_size

- tempdb_log_used_percent

- workers_percent

- xtp_storage_percent

Performance model best practices

Consider the following ten guidelines in developing your own performance model.

1. Set performance objectives or baselines

Which performance goals do you want your system to achieve? Define a baseline first.

On-premises, the product documentation from the vendor usually drives initial setup and configuration of your system. In the cloud, however, special tools are available for estimating the cost of database performance tuning:

2. Prototype software or hardware

Smart administrators use a prototype to build an intuitive database system that serves the workload and meets the needs of users.

When it comes to performance issues in cloud vs. on-premises systems, most database professionals are unsure of where to begin the analysis, which data to collect and how to analyze the data they do collect. As noted above, performance issues can arise from anywhere, including software, hardware and any dependent component along the way.

A typical prototyping decision, for example, would compare the Database Transaction Unit (DTU)-based model, with its simple, pre-configured resource options, versus the vCore-based model, which provides more flexibility and control over the resource.

3. Analyze workload performance

In this phase, you want to capture and interpret the raw performance metrics or the consolidated data using several methodologies that provide deep insights into the database. This provides the direction needed to ensure high-performance database operations while highlighting the specific areas where optimization may be needed.

There are three levels to this approach:

- Basic or purchasing models, key performance concepts and perspectives

- Analysis of experimental versus observational results

- Statistics, real-time monitoring and data visualizations

4. Test software builds for performance, before and after release

Perform non-regression testing on the builds to confirm that a change to software or hardware does not impair system performance. This is more common in cloud vs. on-premises environments.

5. Benchmark/baseline the software releases

Using test cases to set benchmarks and baseline metrics helps with troubleshooting the root causes of bugs in both software and hardware.

The Azure SQL DTU model, for instance, is a combination of CPU, I/O and memory (RAM), based on a benchmark workload called ASDB (Azure SQL Database Benchmark). The benchmark measures the actual throughput based on the database workload.

6. Run a proof of concept

Applying a proof of concept in the target environment allows you to measure real-world system performance.

Consider a team of administrators who move an on-premises server to an Azure Cloud IaaS server, then promptly see that I/O latency spikes and reports do not run as expected. After analysis, they determine that the disk layout was specified as HDD, which sufficed for development and testing but not for production. Upgrading to solid-state drives (SSD) resolved the performance problem.

7. Choose the right service tier

When comparing cloud vs. on-premises scenarios, on-premises I/O subsystems are very different from cloud operating service tiers. Choosing the right service tier plays a major role in database performance.

8. Use tools to monitor performance

The monitoring system provides insight into current and historical performance.

Suppose your on-premises SQL Server shows 80% utilization for disks, and even higher utilization for other resources. If you want to examine historical performance, you may need a third-party monitoring tool because on-premises SQL Server does not include built-in tools to capture history. You could use built-in tools like Dynamic Management Views (DMV), Extended Events and Perfmon, or build custom solutions around them, but that’s more work.

On the other hand, performance monitoring solutions for cloud computing are more robust. As described above, the Log Analytics feature in Azure SQL offers intelligent insights for solving most performance issues. In AWS, the CloudWatch feature is analogous to Log Analytics. In Google Cloud, Operations (formerly Stackdriver) offers cloud monitoring.

Using those kinds of high-quality database performance monitoring tools, you can more easily spot problems before they affect your users. Apart from those, there are many database performance monitoring solutions suited to both cloud and on-premises databases and database servers.

9. Document analysis of performance issues

It is important to document the performance tuning steps that resolve any particular performance issue. In that regard, there is no difference between cloud vs. on-premises databases. Adequate documentation includes a drill-down analysis of the server and client software, including screenshots, scripts and metrics.

10. Execute performance tuning in the cloud vs. on-premises

The rise of cloud database adoption solves some performance problems while introducing others.

The cloud is commonly built upon virtualization technologies, allowing multiple operating system instances or tenants to share one physical server. That means that there can be resource contention from other operating systems. The trick lies in isolating the performance effects of each tenant and determining when poor performance is caused by other tenants.

Cloud vs. on-premises performance considerations

Developing your performance model around these practices ensures better outcomes, whether your databases run on-premises or in the cloud.

Here’s a look at some of the performance areas to consider when comparing cloud vs. on-premises database systems.

| Category | Cloud | On-premises |

| Performance | Directly proportional to service tiers | Directly proportional to the underlying infrastructure |

| Scalable architecture | Scale-up and scale-down (dynamic horizontal scaling) | Scale-up (static) vertical scaling |

| Capacity planning | Dynamic and fast | Multiple layers of approvals needed and the process can be slow |

| Storage | Blob, file share, block storage, object store | Utilizes in-house hardware and software |

| Resource management | Dynamic | Static |

| Maintenance | Less overhead (based on IaaS, PaaS database model) | More overhead |

| Monitoring tools | Centralized monitoring is often built in | Central monitoring is not built in |

| Integration and Deployment | Fairly easy | Not simple |

There are stark differences between cloud and on-premises database environments. With the cloud, you could rent environments at any scale for long enough to perform a benchmark and baseline test. However, this sort of flexibility is not available in on-premises environments.

Cloud platforms allow you to simulate the model with real data and predict performance. You can easily characterize, simulate and test workloads on cloud platforms of different scale, price, architecture, service tier, multitenancy, capacity planning and storage.

In the cloud, distributed systems can be easily integrated and tested. Cloud architectures enable you to adjust and balance the throughput, latency and input/output operations per second (IOPS) of VM disks by choosing the right VM size and storage tiers. In general, larger and more expensive VMs offer guaranteed maximum IOPS and high throughput. Like pricing, performance is based on basic vs. standard vs. premium storage, and HDD vs. SSD.

The cloud approach is not always a guarantee of better performance. In some scenarios, cloud database performance may be worse than on-premises database performance, especially with a basic- or standard-tier model.

Is there a big difference between performance tuning in the cloud vs. on-premises? The most significant difference is in the adoption of the platforms. The scope, platform and roles ultimately define the relative performance of the systems, and can impact how often you need to tune, but not necessarily how you tune.