Data marketplaces play a key role in the evolving effort to leverage high-quality, trusted data and AI within organizations. Instead of navigating an IT development process, manually tracking down useful data or models, or asking around to find data and AI model owners who can provide context, business analysts and data scientists can rely on a data marketplace as a trusted, informative source of self-service data for analysis. While data marketplaces are an ideal scenario for organizations looking to maximize the value of their data, organizations need crucial components in place for one to succeed.

What is a data marketplace?

A data marketplace allows data consumers to easily find, understand, compare and rapidly gain access to governed and authenticated data products, datasets and AI models for analysis and enterprise use – all in one place.

Data marketplaces can be internally or externally focused. Internal data marketplaces provide an efficient way for organizations to democratize high-value, trusted data, enable self-service for consumers, and ensure that governance is in place for data use. They can contain internally developed and packaged data, third-party data purchased for enterprise use, or a combination of both. External data marketplaces are adopted by enterprises to offer data products to customers and partners outside the organization.

Regardless of use case, the primary mission of a data marketplace is to offer self-service data products, datasets and AI models that are governed, authenticated and ready to use for analysis and other business purposes.

Why organizations are moving towards data marketplaces

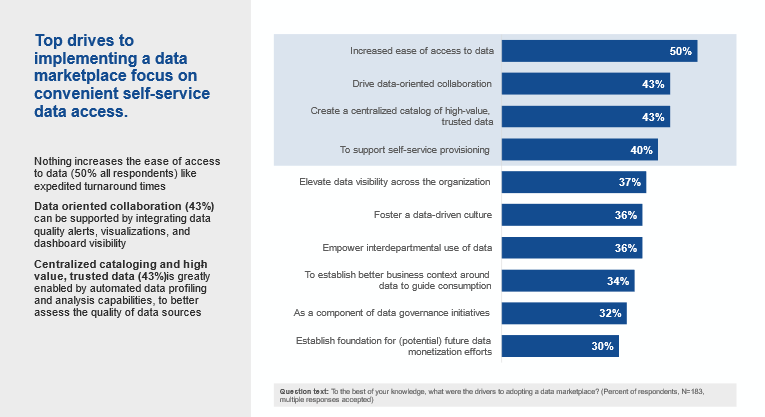

Organizations are increasingly using data marketplaces to boost employee productivity and innovation by providing more self-service data and analytics. Recent State of Data Intelligence research found that, among organizations that implemented data marketplaces, the top four drivers were increasing ease of access to data (50%), driving data-oriented collaboration (43%), creating a centralized catalog of high-value, trusted data (43%) and supporting self-service provisioning (40%).

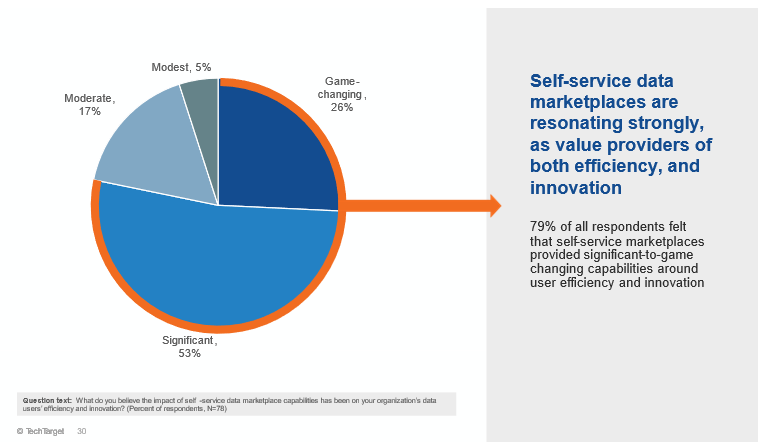

According to the same research, 79 percent of respondents implementing data marketplaces are experiencing a significant to game-changing impact on data user efficiency and innovation.

But in practice, how does this translate into the way an individual data and analytics user would work with a data marketplace? Imagine that, without knowing whom to ask or exactly what to ask for, a data analyst could go to a single online location to identify the high-quality, governed data products that might help with these or other scenarios:

- I’m researching branch location data to ensure my ATMs are in the right places.

- I’m looking for correlations between store locations and profitability.

- I’m running a new clothing line campaign and I need geographical, sales history and demographic data for this specified region.

- The PI rules have changed in the state of Georgia, and I’m not sure whether we have linked our external-facing data to the new state ordinance.

- What is the make-up of our energy footprint in EMEA?

Much like shopping online, an analyst can filter and sort across the catalog of available data products to identify potential fits for their needs. The results could include one or many internal datasets, external demographic data, sales history reports and perhaps even AI models representing buyer history insights. The analyst can then use side-by-side comparison to size up the best-fitting asset, considering past consumer reviews and additional asset descriptions, and use other data literacy aids to identify data sourcing or lineage, business context and governance guidance. Once the analyst has identified the best fit, they can instantly initiate an access request, and automated governance workflows ensure appropriate oversight, documentation and expedited delivery.
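The filter, sort and compare steps described above can be sketched in a few lines. This is a minimal illustration under assumed names: `DataProduct`, its fields (`tags`, `governed`, `data_score`, `avg_rating`), `search` and `compare` are hypothetical and do not correspond to any particular marketplace product's API, and the sample catalog is made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    # Hypothetical catalog entry; field names are illustrative assumptions.
    name: str
    tags: set = field(default_factory=set)
    governed: bool = False
    data_score: float = 0.0  # assumed 0-100 data value score
    avg_rating: float = 0.0  # assumed 1-5 star average from past consumers


def search(catalog, required_tags, governed_only=True):
    """Keep products carrying every required tag (and, by default, only
    governed ones), then sort best-first by score, breaking ties on rating."""
    hits = [
        p for p in catalog
        if set(required_tags) <= p.tags and (p.governed or not governed_only)
    ]
    return sorted(hits, key=lambda p: (p.data_score, p.avg_rating), reverse=True)


def compare(*products):
    """Side-by-side view of the attributes an analyst might weigh."""
    return {
        p.name: {"score": p.data_score, "rating": p.avg_rating, "governed": p.governed}
        for p in products
    }


# Made-up sample catalog for illustration only.
catalog = [
    DataProduct("regional_sales_history", {"sales", "geography"}, True, 88, 4.5),
    DataProduct("store_demographics", {"demographics", "geography"}, True, 74, 4.1),
    DataProduct("raw_pos_extract", {"sales", "geography"}, False, 52, 3.0),
]

matches = search(catalog, {"geography"})
print([p.name for p in matches])
print(compare(*matches))
```

Filtering out ungoverned products by default reflects the emphasis on governed data; in this sketch an analyst could opt in to everything with `governed_only=False`.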

Key benefits of data marketplaces

Data value scoring that lends credibility to data value

Analysis built on low-quality data produces unsatisfactory insights. Data value scoring measures the value of data to identify which data can be trusted, making it easier and faster for consumers to find and use the high-value, trusted data available on the organization’s marketplace. It can also lay the foundation for data monetization.

There are a number of parameters organizations can use to score data. However, I personally like the following elements that Douglas Laney outlines in his book Infonomics: How to Monetize, Manage, and Measure Information as an Asset for Competitive Advantage:

- DQ Score: Data quality profiling results

- Relevance: The critical business procedures the data is associated with

- Timeliness: How often data is updated

- Scarcity: An organization’s “secret sauce”, considered to be an organization’s unique, highly coveted data

- Lifecycle: How long the data will be useful

- Criticality: Number of common data elements

- Certification: Completeness

- User Ratings: How users rate the data on a five-star scale

- Popularity: How frequently a dataset is used

- Data Score: A combination of one or more facets (profiling results, end user ratings and how well the data is curated) that algorithmically categorizes the data into gold, silver or bronze ratings based on parameters

Measuring data value manually is a hassle. Automated data value scoring, when available within a data marketplace supported by data intelligence, makes rating data value practical and achievable. For example, automated scoring could take into account data quality scores, how well the dataset, data product or AI model has been governed and curated, and ratings given by past users to validate its usefulness. Organizations could also weight each of those factors so that the resulting data value score aligns with their unique view of data value.
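The weighted scoring described above can be sketched as a weighted average followed by tiering into gold, silver or bronze. This is a minimal sketch, assuming each factor has already been normalized to the range 0 to 1; the factor names, the default weights and the tier cut-offs (0.8 for gold, 0.6 for silver) are illustrative assumptions, not values prescribed by Laney or by any product.

```python
# Hypothetical default weights; an organization would tune these to its own view of value.
DEFAULT_WEIGHTS = {
    "quality": 0.40,      # data quality / profiling score
    "curation": 0.35,     # how well the asset is governed and curated
    "user_rating": 0.25,  # past users' ratings, normalized
}


def stars_to_unit(stars):
    """Map a 1-5 star rating onto the range [0, 1]."""
    return (stars - 1) / 4


def data_value_score(factors, weights=DEFAULT_WEIGHTS):
    """Weighted average of normalized factors; each weighted factor must be in [0, 1]."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    for name in weights:
        if not 0.0 <= factors[name] <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {factors[name]}")
    return sum(w * factors[name] for name, w in weights.items()) / total_weight


def tier(score, gold=0.8, silver=0.6):
    """Bucket a score into gold, silver or bronze (assumed cut-offs)."""
    if score >= gold:
        return "gold"
    if score >= silver:
        return "silver"
    return "bronze"


factors = {"quality": 0.9, "curation": 0.7, "user_rating": stars_to_unit(4.0)}
score = data_value_score(factors)
print(round(score, 4), tier(score))

# An organization that prizes measured data quality might reweight the same factors.
quality_heavy = {"quality": 0.8, "curation": 0.1, "user_rating": 0.1}
reweighted = data_value_score(factors, quality_heavy)
print(round(reweighted, 4), tier(reweighted))
```

With these made-up inputs the asset lands in silver under the default weights and in gold under the quality-heavy weights, which illustrates how weighting lets an organization encode its own view of value.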

Support for both internal and third-party data

Across organizations, data marketplaces can look very different. Some include only governed internal data. Others provide live, ready-to-use access to external datasets from more than 2,000 third-party data providers. Some offer “clean rooms” that apply the highest caliber of governance for trusted redistribution.

Beyond easing discovery for data consumers and the implicit validation that comes from housing third-party data in an organization’s own data marketplace, managing it there offers some additional practical benefits.

Third-party data subscriptions can be expensive, and housing them all within one data marketplace reduces the likelihood of duplicate purchases across an organization. Housing third-party data within your own data marketplace also ensures that your unique data governance protocols and processes are followed, and lets you apply the same data value scoring, user ratings feedback and other built-in marketplace capabilities so that high-value, trusted data is used.

Faster time to data value

A model-to-marketplace approach to creating data products can automate the development cycle. Requesting a data product shouldn’t require a long software development lifecycle. Rather than communicating and refining requirements from business to analyst to designer to developer to DBA to fulfill a data need, analytics users with a data modeling environment integrated with a data marketplace have a one-stop shop: first, they can evaluate whether the available data products meet their needs; second, if they don’t, they can use built-in automation to request a new data product and receive it. Integrating data modeling and data catalog automation with data marketplace capabilities offers an efficient model-to-marketplace approach that shortens development and delivery, speeding up time to data value.

Monitoring AI data and model drift

AI can be a black box, and transparency, business curation and observation are how organizations can counter it. For organizations to trust the insights gleaned from an AI model, they need a baseline understanding of the model’s intent, the business problem it solves and the critical decisions made from it; that understanding keeps the model in check and focused on its original purpose.

Data lineage combined with data profiling in a data marketplace offers an inside look at where data comes from, and the profiling results (think red, yellow, green) give organizations transparency. Organizations can also monitor AI models for drift and bias by tying in data observability or continuous data monitoring capabilities from data quality software, and be alerted as soon as data starts to drift outside acceptable parameters. By bringing together automated data catalog, data quality and data marketplace capabilities, organizations can harmonize the entire use case, from business intent, to a data product request, to observing drift and sharing the results across departments and business units so that any needed corrections are made quickly.
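Drift alerting of this kind can be sketched with the population stability index (PSI), which compares how a feature's current values are distributed against a baseline. PSI is one common drift metric among several and is used here only for illustration; the article does not prescribe it. The 0.1 and 0.25 cut-offs mapped to green, yellow and red are widely cited rules of thumb, treated here as assumptions, and the sample data is synthetic.

```python
import math
import random


def psi(baseline, current, bins=10, eps=1e-6):
    """Population stability index of `current` against `baseline`.

    Bin edges are equal-width over the baseline's range; values outside that
    range are clamped into the first or last bin. `eps` keeps empty bins
    from producing log(0)."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(score, warn=0.1, alert=0.25):
    """Map a PSI value to a red/yellow/green signal (assumed thresholds)."""
    if score >= alert:
        return "red"
    if score >= warn:
        return "yellow"
    return "green"


# Synthetic data: a stable sample and one whose mean has shifted by 1.5 standard deviations.
random.seed(0)
baseline = [random.gauss(50, 10) for _ in range(5000)]
stable = [random.gauss(50, 10) for _ in range(5000)]
shifted = [random.gauss(65, 10) for _ in range(5000)]

for label, sample in [("stable", stable), ("shifted", shifted)]:
    value = psi(baseline, sample)
    print(label, round(value, 3), drift_status(value))
```

A monitoring job might run a check like this on a schedule for each model input it tracks and raise an alert whenever the status turns red.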

Crucial components necessary to drive data marketplace success

Start with business value in mind

The overall goal of a data marketplace is to have quality data available for users to develop insights for the benefit of an organization. Aiming for a few key intended use cases and business outcomes serves as a guiding light when implementing a data marketplace.

Ensure a solid data management foundation is in place

If you have invested in data governance, data design and data management, you expect intelligent, all-inclusive, highly trusted business insights. That’s the goal, right? However, getting good results from a data marketplace depends on three things:

- Data Discovery: Can users quickly find the datasets and assets to solve their challenges?

- Data Quality: Can users trust that their analysis will be based on well described, vetted, profiled data?

- Data Literacy: Will users have context to know what a set of data is, how it’s used and the guardrails to ensure accuracy?

It’s easy for organizations to say that somebody owns this data and that it’s well curated. But has critical data been profiled? Is data lineage in place? Are there guardrails around how select data should be used? How do end users feel about a dataset?

Deriving useful and applicable analysis all starts with having a solid data management foundation.

Benchmark the growth and maturity of organizational data

Continuously benchmarking the growth and maturity of an organization’s ability to fully leverage the value of its data, through value use cases in a seven-step data maturity program, allows teams to deliver a return on their data marketplace efforts early and often. Examples to start with could include highlighting where PII data is leaking, identifying data bloat, and performing root cause analysis and remediation for select issues.

Conclusion

As organizations venture into the world of data marketplaces, aligning use cases, people, processes and technology is imperative. Stay use-case driven. This approach sets the stage for a data marketplace that not only provides broader yet governed access to high-value, trusted data but also delivers the insights businesses need to thrive in an increasingly data-centric landscape.