As everyone has seen in the news, artificial intelligence (AI) is going to change the world. There’s a lot of hype around it, but beneath the hype and wonder is real technology that is advancing fast. It is advancing so fast that governments, and even the large tech corporations that built it, have struggled to regulate it. Its move to open source, where it’s free for all to add to, adapt and use, caught everyone off guard. Hackers and bad actors are adaptive and creative at using new technology to advance their own agendas, especially when it makes them much more efficient and effective.

Regulations are on the rise

The good news is that people like Geoffrey Hinton, the “Godfather of AI,” and Sam Altman, CEO and co-founder of OpenAI, have warned of the risks of AI, and the world has taken notice. Governments in the U.S. and the European Union have quickly proposed safeguards through the U.S. Blueprint for an AI Bill of Rights and the EU AI Act. Corporations such as Apple, Wells Fargo, Amazon and others are limiting its use until they can use the technology responsibly and safely. That is all headed in the right direction. But for those who are not yet limiting use, AI in the workplace may pose serious security risks.

What AI security risks and challenges do we face?

Hackers and weaponization

Unfortunately, we all live in the real world, and we know that regulation and built-in safety protections only apply to the good guys. The hackers of the world have no such constraints. They are free to take open-source technology and build on it. Projects such as AutoGPT already leverage ChatGPT to complete a string of tasks that can be chained together, such as running commands, executing scripts, and modifying, debugging and compiling code. AutoGPT works out the steps needed and acts on them recursively, adapting each step until the task is complete.

It’s not much of a leap to imagine hackers combining projects such as AutoGPT with generative AI (large language models, or LLMs, such as ChatGPT) and open-source hacking tools such as Mimikatz, Rubeus and PowerSploit to weaponize AI and take over corporations or high-value government facilities.

Microsoft’s first Chief Scientific Officer, Eric Horvitz, acknowledges this by warning of AI weaponization: “There will always be bad actors and competitors and adversaries harnessing [A.I.] as weapons.” NSA Cybersecurity Director Rob Joyce says “Buckle up,” explaining that next year “we’ll have a bunch of examples of where [A.I. has] been weaponized, where it’s been used, and where it’s succeeded.”

Bypassing OpenAI

You might think that OpenAI won’t allow ChatGPT to be used for hacking. That is mostly correct. OpenAI has put safeguards in place to prevent ChatGPT from doing anything malicious. Unfortunately, researchers have found ways to bypass them. One way is to use prompt engineering to jailbreak ChatGPT and have it work in DAN mode, which stands for “Do Anything Now.” There is also a plethora of open-source LLMs that have been scaled down to run locally on servers, which hackers can deploy without any need to jailbreak a large commercial offering.

AI hacker bots, ransomware as a service and custom-generated malware

Right now, hackers (red teams) are creating AI hacker bots to execute social engineering attacks, break into networks, scour code for places to attack, automate the exploitation of zero-day vulnerabilities, open back doors and cover their tracks in real time to make detection extremely difficult. These attacks can be sped up or slowed down to blend in with regular user behavior, bypassing user and entity behavior analytics (UEBA) threat detection. Soon (if not already), hacker bots will be working 24×7 to find a way in and then escalate their privileges through various attack paths in Active Directory. Once they can do that, they can take over the domain and hold your company for ransom, destroy your servers to cause havoc, or steal your intellectual property. The defenders (blue teams) will need to respond in real time, leveraging all available tech to detect and stop attacks and close any loopholes hackers have exploited.

This is in addition to the worrying trends of Ransomware as a Service (RaaS) and the ability of generative AI to write as much as 80% of the code for custom malware. The barrier to entry into hacking has never been lower, and this all adds up to increased attacks on your company.

Ways to combat AI security risks

Protecting against AI-generated security risks requires a multi-layered and proactive defense approach. By implementing a combination of network security, application and data layer protection, endpoint security, identity management and SIEM systems, organizations can enhance their security posture and effectively combat evolving threats from AI. Timely detection and response are critical in minimizing the damage caused by hackers within the network. So, staying vigilant, updating security measures regularly, and adapting to the changing threat landscape will help to safeguard your environment.

Below are some key measures to consider when combatting the security risks of AI.

Network layer protection

Implement robust network security measures such as firewalls and intrusion detection systems (IDS). These solutions help monitor and filter network traffic, identifying and blocking suspicious activities.

Application and data layer security

Ensure comprehensive logging of events at the application and data layers. This includes monitoring and analyzing system logs, application logs, and database logs to detect any anomalous behavior or unauthorized access attempts.
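As a rough illustration of what analyzing logs for unauthorized access attempts can look like, the sketch below counts failed logins per source address and flags any address at or above a threshold. The log format, the `LOGIN_FAILED` event name, the sample data and the threshold of three are all assumptions made for the example, not the output of any particular product.

```python
from collections import Counter

# Hypothetical log format: "<timestamp> <event> user=<name> ip=<address>"
SAMPLE_LOGS = [
    "2024-01-01T10:00:01 LOGIN_FAILED user=alice ip=10.0.0.5",
    "2024-01-01T10:00:03 LOGIN_FAILED user=alice ip=10.0.0.5",
    "2024-01-01T10:00:04 LOGIN_FAILED user=bob ip=10.0.0.9",
    "2024-01-01T10:00:06 LOGIN_FAILED user=admin ip=10.0.0.5",
    "2024-01-01T10:00:09 LOGIN_OK user=alice ip=10.0.0.5",
]


def parse_line(line):
    """Split one log line into a dict with timestamp, event and key=value fields."""
    timestamp, event, *pairs = line.split()
    fields = dict(pair.split("=", 1) for pair in pairs)
    return {"timestamp": timestamp, "event": event, **fields}


def flag_failed_logins(lines, threshold=3):
    """Return {ip: failure_count} for addresses with at least `threshold` failures."""
    failures = Counter(
        record["ip"]
        for record in map(parse_line, lines)
        if record["event"] == "LOGIN_FAILED"
    )
    return {ip: count for ip, count in failures.items() if count >= threshold}


if __name__ == "__main__":
    print(flag_failed_logins(SAMPLE_LOGS))
```

A fixed threshold like this is easy to evade by slowing an attack down, which is why it is usually only one signal among several.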

Endpoint protection

Adopt advanced endpoint security and management solutions that go beyond traditional antivirus and malware detection. These solutions should include features such as behavior-based analysis, threat intelligence integration, and proactive patch management to protect endpoints from emerging threats like zero-day exploits.

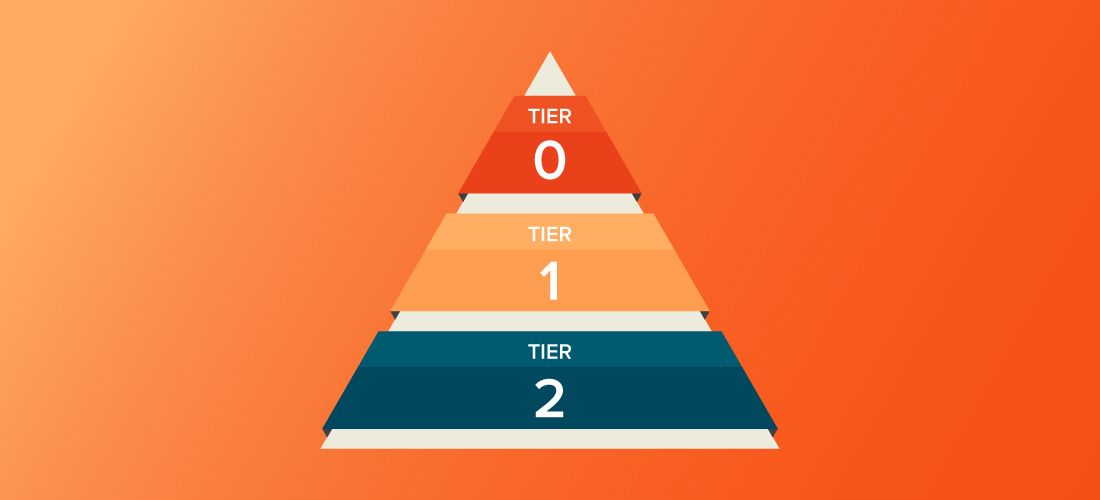

Identity management

Establish strong identity management practices, specifically focusing on securing Active Directory (AD) and Azure AD. Implement robust access controls, multi-factor authentication, regular security audits and change control around GPOs and critical Tier Zero assets to minimize the risk of unauthorized access and privilege escalation. In addition, make sure you have a solid backup and recovery solution and plan so you can recover quickly if the unthinkable happens.
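One simple form of change control is to compare the current membership of privileged groups against an approved baseline. The sketch below does this with plain dictionaries; how the snapshots are exported from the directory is left out, and the group and account names are hypothetical.

```python
def diff_membership(baseline, current):
    """Compare two {group: [members]} snapshots and report additions and removals."""
    changes = {}
    for group in sorted(set(baseline) | set(current)):
        before = set(baseline.get(group, ()))
        after = set(current.get(group, ()))
        added = sorted(after - before)
        removed = sorted(before - after)
        if added or removed:
            changes[group] = {"added": added, "removed": removed}
    return changes


# Hypothetical snapshots of privileged group membership.
BASELINE = {"Domain Admins": ["alice"], "Enterprise Admins": ["alice"]}
CURRENT = {"Domain Admins": ["alice", "svc-backup"], "Enterprise Admins": ["alice"]}

if __name__ == "__main__":
    for group, change in diff_membership(BASELINE, CURRENT).items():
        print(group, change)
```

Any entry in the result is a change that should map to an approved request; anything that does not is worth investigating.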

Security Information and Event Management (SIEM)

Deploy a SIEM system with advanced threat detection capabilities. This system should be capable of correlating events and logs from various systems and applications, enabling proactive threat hunting, alerting, and rapid incident response.
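To make event correlation concrete, here is a minimal sketch of one rule: raise an alert when a privileged group change follows a failed login for the same user within ten minutes, even though the two events come from different systems. The event fields, source names, event types and the ten-minute window are assumptions for illustration; a real SIEM expresses such rules in its own query language.

```python
from datetime import datetime, timedelta

# Hypothetical normalized events collected from two different systems.
EVENTS = [
    {"time": "2024-01-01T10:00:00", "source": "vpn", "user": "bob", "type": "login_failed"},
    {"time": "2024-01-01T10:02:00", "source": "vpn", "user": "bob", "type": "login_success"},
    {"time": "2024-01-01T10:05:00", "source": "directory", "user": "bob", "type": "group_change"},
    {"time": "2024-01-01T11:00:00", "source": "directory", "user": "carol", "type": "group_change"},
]


def correlate(events, first="login_failed", second="group_change",
              window=timedelta(minutes=10)):
    """Return (user, first_time, second_time) tuples where an event of type
    `second` follows the most recent `first` event for the same user within `window`."""
    last_seen = {}  # user -> time of the most recent `first` event
    alerts = []
    # ISO 8601 timestamps in the same format sort chronologically as strings.
    for event in sorted(events, key=lambda e: e["time"]):
        when = datetime.fromisoformat(event["time"])
        user = event["user"]
        if event["type"] == first:
            last_seen[user] = when
        elif event["type"] == second and user in last_seen:
            if when - last_seen[user] <= window:
                alerts.append((user, last_seen[user].isoformat(), when.isoformat()))
    return alerts


if __name__ == "__main__":
    for alert in correlate(EVENTS):
        print(alert)
```

Neither event is alarming on its own; the value of correlation is that the pair, tied together by user and time, is.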

Timely response

Recognize that the timeframe for attacks is shrinking rapidly, with hackers spending only hours or days inside a network before achieving their goals. Therefore, organizations must prioritize swift incident response and take proactive measures to minimize the impact of breaches.

AI security risks will evolve

As with any new technology, the advent of AI brings both challenges and benefits. AI security risks are only just emerging and will continue to evolve and escalate, as the overall cyber threat landscape has.

To put this in perspective, Microsoft recently conducted a large study and found that there is a 0.5% chance that an employee in your organization is hacked in any given month. This means that out of every 1,000 people in your organization, about five will be compromised each and every month on average. The present and future require comprehensive defense strategies for cyber risk management and proactive approaches to mitigating threats.
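The arithmetic behind a monthly compromise rate is easy to check. The sketch below assumes a rate of 0.5% per employee per month, which is the rate consistent with five compromises per 1,000 people, and treats employees as independent, which is a simplification. The function names are made up for the example.

```python
def expected_compromises(headcount, monthly_rate):
    """Average number of compromised employees per month."""
    return headcount * monthly_rate


def prob_at_least_one(headcount, monthly_rate):
    """Chance that at least one employee is compromised in a month,
    assuming each employee is compromised independently."""
    return 1 - (1 - monthly_rate) ** headcount


if __name__ == "__main__":
    rate = 0.005  # 0.5% per employee per month (assumed)
    for headcount in (100, 1_000, 10_000):
        print(
            headcount,
            round(expected_compromises(headcount, rate), 2),
            round(prob_at_least_one(headcount, rate), 4),
        )
```

Under these assumptions, an organization of 1,000 people is all but certain to see at least one compromise in any given month, which is why detection and response matter as much as prevention.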

Is your network safe? Are you ready for the onslaught of hackers running 24×7 trying to find a way in? These and many more burning questions are ones IT teams around the world will be asking themselves as we all navigate the evolving landscape of AI.