As your organization broadens its data protection strategy, a smart move is to invest in secondary storage. A comprehensive approach includes software that can reduce your overall storage requirements through compression and deduplication.

In this post you’ll find information about the differences between primary and secondary storage, along with guidelines you can use to apply secondary storage best practices in your own organization.

What is secondary storage? Why is it necessary?

Think of secondary storage as a place for data that is not likely to be accessed frequently. Backup storage is a good example because it’s unlikely you’ll need to access it unless your primary, production data is lost. That could happen through human activity – whether malicious or accidental – or through a natural disaster or a hardware failure.

Your secondary storage sits outside the boundaries of normal production usage, and you access it only when necessary. That could be the result of malware, viruses or ransomware. Or a user could submit a trouble ticket reading, “I’ve deleted all of the data by mistake” or “I’ve dropped a database and I shouldn’t have. I need to restore it.” It falls to you to restore a copy of the lost data.

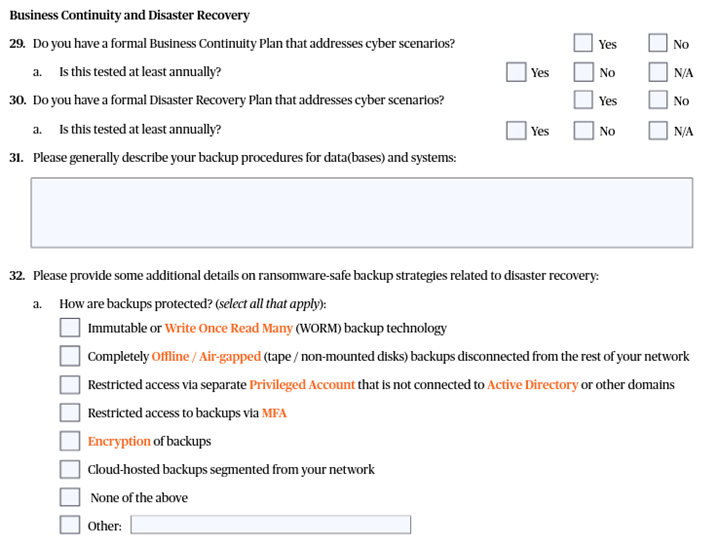

It’s like an operational insurance policy, assuring you of business resilience, business continuity, disaster recovery and ransomware recovery. In fact, in an age of rampant ransomware, business insurers now insist that certain criteria be met. A combination of secondary storage and immutable backup, which ensures you have a copy of your data that can’t be changed, encrypted or deleted, is becoming a mainstream requirement for insuring the business at a reasonable cost. Add zero trust, multi-factor authentication and 3-2-1 backups (three copies of your data, on two types of media, with one copy off-site) to the list of requirements, and it quickly becomes clear that a capable solution and a clear set of policies have to be in place. For example, you may have to declare what your backup solution is capable of delivering. How you answer is likely to affect your premium.

Common options for secondary storage

Naturally, most primary storage uses the highest-performing devices like solid-state drives (SSD) and flash drives. That ensures the shortest possible time to both save and retrieve data.

In most secondary scenarios, backup software schedules the copying of given files, folders, virtual machines and so on from primary storage to secondary. Speed is therefore still a consideration, but expectations are lower than for primary storage that is serving, for example, databases. The tolerance for lower performance opens up other storage options:

- Hard drives – Traditional disks and arrays are suitable. That may extend to solid-state disks.

- Flash storage – This is becoming more popular as prices fall. As an investment in business improvement around resilience, flash can bring high performance with faster backup and restore operations.

- Tape – The war horse of data protection, tape is enjoying a resurgence because it is a practical, air-gapped backup solution. When you back up to tape, remove the tape from the library and place it in a vault, you form a true air gap that no attacker can jump. Tapes do, however, need to be stored properly to preserve them from loss, breakage, deterioration and environmental damage.

- Cloud – A textbook example of off-site storage, the cloud can play a valuable role in your organization’s 3-2-1 backup scheme. Cloud storage is a popular, stable option because you can put your backups there and be confident that they’ll still be available years from now. Plus, cloud service providers offer cost-efficient tiers inside the cloud, depending on how long the data will be there and how often you’re likely to access it. Over time, a given file can move from local, primary storage to less expensive, secondary storage, then on to cloud storage. Then, if you still haven’t accessed it, the cloud provider can automatically move it to progressively less expensive tiers.
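
The tiering idea in the cloud option can be sketched in a few lines of Python. This is only an illustration: the tier names and age thresholds below are hypothetical, and real cloud providers define their own tiers, minimum storage durations and retrieval fees.

```python
from datetime import date

# Hypothetical tiers: (maximum days since last access, tier name).
# These names and thresholds are assumptions for illustration only.
TIERS = [
    (30, "local-secondary"),
    (90, "cloud-standard"),
    (365, "cloud-infrequent"),
]
COLDEST_TIER = "cloud-archive"


def pick_tier(last_accessed: date, today: date) -> str:
    """Return the tier a backup belongs in, given how long it has sat idle."""
    idle_days = (today - last_accessed).days
    for max_idle_days, tier in TIERS:
        if idle_days <= max_idle_days:
            return tier
    return COLDEST_TIER
```

A backup last touched two weeks ago stays on local secondary storage, while one that has been idle for more than a year lands in the archive tier.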

The difference between primary and secondary storage

Primary storage

Primary storage is used continuously by enterprise users day in and day out for productivity, applications and commerce. Because of its high performance and low latency, it’s valued for business-critical operations.

- It makes up virtual infrastructure, with all the virtual machines running on it.

- It can form part of an organization’s network-attached storage (NAS), where users save their files and folders.

- IT can build it into a storage area network (SAN) comprising multiple chunks of storage and attached hosts.

- In the context of the cloud, storage can be classed as primary if you’re running or building an application there. The storage behind an application service could in effect be considered primary because of its importance. Judge whether storage is primary by what it serves to application consumers and by its importance to the business in general.

Secondary storage

Secondary storage is where you’ve copied the data that you may need to recover from primary storage. It’s your backup – not your primary, production data – so the storage can be fundamentally different in size, scale and performance.

Note that, although secondary storage may keep you from losing money, it does not make you any money. That’s why low cost is usually the prime motivator when businesses shop for it. However, one of its most overlooked yet desirable characteristics is that it is capable of receiving huge amounts of data from primary storage quickly. Speed matters because of the physical limits on network throughput (and on everyone’s patience) when a backup takes too long.

From the perspective of users, secondary storage is dead storage that they no longer access. But from the perspective of the business, it’s needed for resilience and continuity. And from the perspective of regulators, it can be a requirement.

Optimizing secondary storage

Given that secondary storage is necessary but of lower priority than primary storage, smart backup administrators try to optimize it any way they can. That includes investing in techniques like deduplication and compression to reduce the quantity of backup data moving across the network and being stored. When those techniques are software-defined, they can complement a wide variety of backup hardware and improve the performance of even low-end hardware.
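
To make deduplication and compression concrete, here is a minimal sketch in Python. It assumes fixed-size chunks, SHA-256 fingerprints and zlib compression, with an in-memory dictionary standing in for the backup target. Commercial products typically use more sophisticated approaches (variable-size chunking, for example), so treat this as an illustration of the principle rather than how any particular product works.

```python
import hashlib
import zlib


def dedupe_and_compress(data: bytes, store: dict, chunk_size: int = 4096) -> list:
    """Split data into fixed-size chunks, keep one compressed copy of each
    unique chunk in `store`, and return the ordered list of chunk hashes."""
    recipe = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in store:  # only chunks not seen before consume storage
            store[digest] = zlib.compress(chunk)
        recipe.append(digest)
    return recipe


def restore(recipe: list, store: dict) -> bytes:
    """Rebuild the original bytes from the ordered list of chunk hashes."""
    return b"".join(zlib.decompress(store[digest]) for digest in recipe)


# Two nightly backups that share most of their content.
store = {}
monday = b"A" * 8192 + b"B" * 4096
tuesday = b"A" * 8192 + b"C" * 4096
monday_recipe = dedupe_and_compress(monday, store)
tuesday_recipe = dedupe_and_compress(tuesday, store)
# Six chunks were backed up, but the store holds only 3 unique ones.
```

Because Tuesday’s backup repeats most of Monday’s chunks, only the changed chunk adds to the store, and each stored chunk is compressed before it is written.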

Backup administrators constantly face a cost-performance trade-off when they invest in any storage software or hardware. The efficiency requirement for secondary storage is just as stringent as it is for primary storage, but admins want to meet that requirement while spending less money on secondary storage than on primary storage.

In fact, it’s important for your primary and secondary solutions to remain on par with each other, or at least stay in the same ballpark.

If primary and secondary storage get too far out of step with each other, backup performance can suffer.

When you dig into what actually caused that reduced performance, you often find that the organization has grown its production environment without modifying its backup configuration. If your production environment has grown by a factor of 10 in three years while your secondary backup storage has stayed the same, it’s no wonder performance has dropped.

In other words, everything involved in backup and storage has to keep up with everything else going on in your business. If primary storage usage grows, your secondary usage will have to grow as well. Hence the appeal of techniques like compression and deduplication, which can help reduce the impact of growth on backup performance.
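
A rough back-of-the-envelope calculation shows why growth hurts. The sketch below assumes a simple model in which backup time is just data volume divided by sustained throughput; the 500 MB/s figure and the 10:1 reduction ratio are made-up example values, and the reduction only shortens the transfer if it happens before the data crosses the network.

```python
def backup_hours(data_tb: float, throughput_mb_s: float,
                 reduction_ratio: float = 1.0) -> float:
    """Hours needed to move data_tb terabytes at throughput_mb_s MB/s.

    reduction_ratio is the assumed combined deduplication and compression
    ratio applied before transfer (10.0 means 10:1; 1.0 means none).
    """
    megabytes = data_tb * 1_000_000 / reduction_ratio  # decimal: 1 TB = 1,000,000 MB
    return megabytes / throughput_mb_s / 3600


before_growth = backup_hours(10, 500)        # about 5.6 hours
after_growth = backup_hours(100, 500)        # about 55.6 hours
with_reduction = backup_hours(100, 500, 10)  # back to about 5.6 hours
```

Under these assumptions, a tenfold increase in production data turns an overnight backup into one that takes more than two days, unless throughput or data reduction improves to match.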

Probably the most prudent course of action I’ve ever encountered involves change control. One of our customers established the policy that no new data or application could be deployed unless the requesting manager first demonstrated how it would be backed up. Industry regulations required that they have a backup, so they made it a condition of deployment. Before making any change in their infrastructure, they would ask, “If we do that, can we back it up?” If the answer was not “yes,” then they’d wait until the business had that capability: more storage, better performance, another machine to host the backup software, whatever it took.

In short, your secondary storage should be good enough to meet all your needs for size (capacity) and speed (throughput).

Secondary storage best practices for architecture and management

Overall secondary storage architecture

When you’re defining your architecture, secondary storage can become part of your 3-2-1 scheme for keeping another copy of the data, as described above. Do you copy your backup from primary storage to another location? Do you need a rack in a colocation center? Do you want to run backup in the cloud and make the copy there?
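
As the 3-2-1 rule is commonly stated, you keep at least three copies of your data (counting production), on at least two types of media, with at least one copy off-site. A small Python sketch can check a planned architecture against that rule; the `BackupCopy` class and the example locations are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class BackupCopy:
    name: str
    media: str      # for example "disk", "tape" or "cloud"
    offsite: bool


def meets_3_2_1(copies: list) -> bool:
    """True if there are at least 3 copies, on at least 2 media types,
    with at least 1 copy held off-site."""
    media_types = {copy.media for copy in copies}
    return (
        len(copies) >= 3
        and len(media_types) >= 2
        and any(copy.offsite for copy in copies)
    )


plan = [
    BackupCopy("production", "disk", offsite=False),
    BackupCopy("local backup", "disk", offsite=False),
    BackupCopy("cloud backup", "cloud", offsite=True),
]
plan_ok = meets_3_2_1(plan)
```

Dropping the cloud copy from this plan would fail the check on all three counts: too few copies, only one media type and nothing off-site.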

Deduplication and compression

Techniques like deduplication and compression will allow your solution to grow along with your business. A sound approach is to incorporate them as software-defined components.

Repetition and retention

Along with the question of speed and size of your backup operation, you’ll focus on the repetition of backup – how often you save – and the retention of backed-up data – how long you’ll keep it. Some of the specific repetition and retention parameters will have to be determined either by compliance or by business needs.
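
Repetition and retention together drive how much capacity you need. The following Python sketch uses a deliberately simple model, one full backup plus one incremental per day, before any deduplication or compression. The 2 percent daily change rate is an assumed example value; measure your own before sizing anything.

```python
def retained_tb(full_tb: float, daily_change_rate: float,
                retention_days: int) -> float:
    """Capacity for one full backup plus a daily incremental for every
    day of retention, before any deduplication or compression."""
    incrementals_tb = full_tb * daily_change_rate * retention_days
    return full_tb + incrementals_tb


one_month = retained_tb(10, 0.02, 30)    # roughly 16 TB
one_year = retained_tb(10, 0.02, 365)    # roughly 83 TB
```

Under this model, stretching retention from a month to a year multiplies the capacity needed for the same 10 TB of production data by about five, which is why compliance-driven retention periods deserve attention early in planning.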

Zero trust

And finally, because cyberattacks and ransomware are always unwelcome guests in IT planning, zero trust is as useful a practice in secondary storage architecture as it is anywhere else. With zero trust in mind, you should use different privilege management systems for production and for backup.

Why? To ensure that a breach or compromise of one does not affect access to the other. If, for example, your production environment depends on Active Directory, you can segregate access to your backups with separate authentication. That puts another obstacle in the way of attackers.

The most common mistakes organizations make when using secondary storage

The most common mistakes involve performance estimates. Many backup administrators underestimate their performance requirements or set an impossibly short backup window, as in “I want to cut my backup time by half.”

The problem, of course, is that it may not be possible. The satisfactory performance of secondary storage depends on the performance of primary storage. If you overestimate the abilities of your current infrastructure and expect high performance from a storage target unsuited to the task, you’re likely to assign blame to the wrong culprit.

The main mistake looks like an attempt to achieve high performance on an unsuitable target architecture, but if you scratch a bit deeper, you’ll discover a problematic mindset at work. Naturally, IT professionals want to use products they know; who wouldn’t? But to achieve a performance goal on a limited budget they may need to change their mindset and budge from their comfort zone. Organizations may need to think differently about their target architecture.

Conclusion

As in most IT projects, planning your secondary storage and backup solution is a matter of balancing business risk against cost. How much is it worth?

At the extreme, you can build a system to look for changed data every millisecond and immediately write it to storage. And you can keep every change in the history of the data for multiple years. Will that make your business resilient? Yes. Will it be cheap? No.

The longer the gaps between backups (that is, the longer your recovery point objective, or RPO) and the shorter your desired data retention, the cheaper your solution. The essential question is “How much data can we afford to lose between backups, and how much is it going to cost us?” The point at which those lines cross is your ideal situation.

You can look at your budget and say, “If that’s how much we can spend, then we’ll have to adjust the repetition. We may have to use deduplication and compression to lower our storage cost. That’s what it will take to meet the constraints of our backup window.”

Keep an eye on your projected data growth and avoid deploying a solution that addresses only this year’s time constraints and business requirements.

The optimal architecture for secondary storage accommodates growth.