Organizations with mature data environments make it easy for internal users to access and leverage data for value. There is an intelligent structure built into designing, categorizing, finding, curating and delivering data. And with a great data leader and team, there is value found at each step of the structure.

Less data-mature organizations, however, struggle to find value on their data intelligence journey. The result is a lack of available, trustworthy data and minimal insights that the business can act on.

When redistributing data to the masses, data mesh and self-service environments have one paramount concern: how good is this data? The hallmark of a mature data environment is confidence in data that has been governed, profiled, curated and scored, and that is offered to users through a self-service marketplace.

What is data maturity?

Data maturity measures how extensively and how effectively an organization uses its data to drive decision-making.

What does good data maturity look like?

A mature data environment allows users to easily shop for datasets according to the business question, campaign or project they are working on. There is a “known quality” to the data whether it is reflected in data quality profiling results, end user rankings, a governance rating or a combination of these quality facets.

The data is searched for, and represented, according to the business unit and domain. Where the data is housed is governed. It’s clear who last updated the data, policy, business rule and more, and when.

The data itself is governed, classified and tagged according to how it is to be handled. The users will know if they are using a dataset with privacy or regulatory controls associated at the column level of data.

Data is easily shared. There is transparency forward, backwards and everywhere in between. Data engineers and analysts can follow the lineage of the data, where the data originated and what happened to the data, how it was calculated, and what rules were applied. If there is an issue anywhere along the data pipeline, one is able to trace quickly upstream and downstream to understand the scope of the issue and provide the root cause analysis needed to fix long-standing data issues at the source.

There are KPIs and metrics around data assurance. The organization is measuring things like how much time is spent wrangling the data versus working with the insights, and how complete and well curated the data is. The organization has invested in a CDO or CDAO, and everyone participates and interacts with the data; it is not left to one pocket of the organization to master and manage. These are all characteristics of a world-class data program and a mature data environment. Massive redundancy and data bloat issues have been remediated, making it easy to find data and use it to its fullest potential.

Why is data maturity important?

Data done right is an organization’s top business asset. Looking at the data, an organization can tell when something is working or not working and use the data to pivot or disrupt.

In the era of AI and digitization, we cannot rely on our experience and our guts. We need prompts and indicators that catch when data drifts. We need data, and data-literate employees, to create, tweak and streamline processes, campaigns, training, trends and customer satisfaction based on mature, trusted, well-curated data.

Without this we are just guessing or, in the worst case, acting on data that has not been well vetted or verified.

When data is not easily explained, we are slow to respond to an audit, hire teams of consultants to figure it out, and continue to derail other projects to answer the latest data escalations. Lines of business are left to their own devices to find their data and spin up yet another data lake, warehouse or BI environment to further proliferate the data ocean and the risk of not getting it right.

There is an ocean of data in every organization. Left unmanaged, contamination (or incorrect usage) can spread across the organization, causing yet another breach of privacy or misrepresentation of business assets, calculations, policies and rules.

Bringing data maturity to an organization

At the onset of any data initiative, it is good to get a baseline on where the organization is with the maturity of their data. Offering a survey is a great way to calculate that baseline.

Beyond that, data leaders looking to bring data maturity to their organizations face unique challenges with supporting data at scale. Experienced data leaders draw on their past experience supporting everything from traditional data governance, compliance, risk and privacy information to modern, disruptive use cases in the analytical environment. This experience drives data leaders to begin their data maturity programs with purpose and intention.

Kevin Smith, CDO of Tokio Marine, and his team began their journey with the following mission statement:

“The Data Office’s vision is to make the data that is critical to our business; trustworthy, accessible, and secure whilst maintaining our agility and innovative spirit. We will build a highly literate data community who will build, support, and promote this vision.”

It is my opinion that a strong, well-championed vision like this, tied to a “Data Assurance” program, leads data maturity programs to success. It brings everyone to the table with a “deliberate” call to action that aligns with the overall calling.

Highly effective data leaders know how to set the table and stand confidently behind their data because they have a well-structured program and an internal and external team of SMEs that align with the overall mission. The give and take between internal team members and external vendors strengthens data programs and future-proofs the overall pursuit of maturing and “freeing” data across an organization.

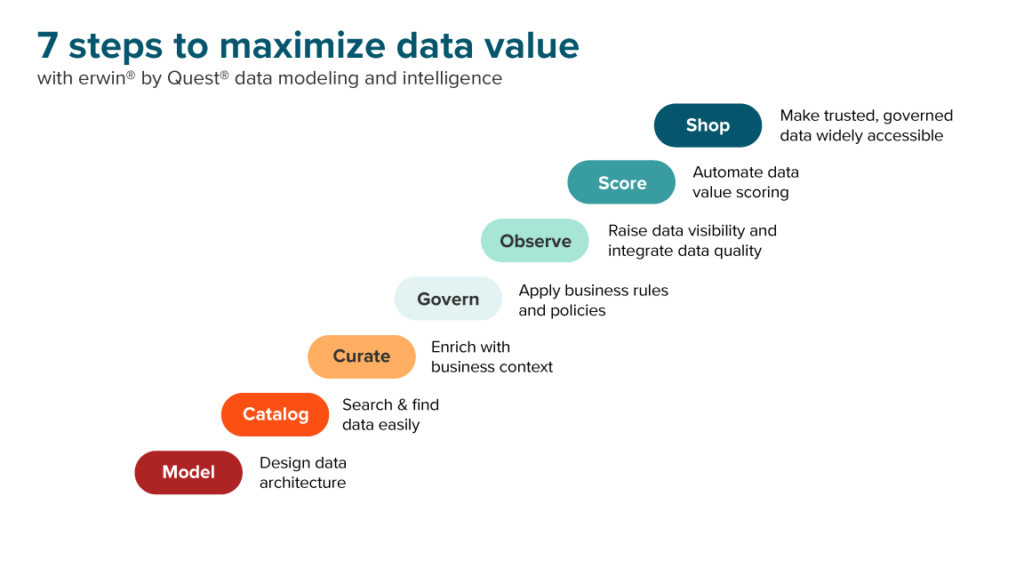

7 steps to achieve data maturity and maximize data value

Maximizing data value becomes much easier when you take deliberate steps toward data maturity. The most successful implementations we see focus on relevant use cases and offer incremental points of value in the first 90 days and on through 6-9 months.

Do not operate underground waiting for perfect. You need to know early and often what’s resonating, and the only way to do this is to deliver iterative use cases to the user community. For each of the following seven steps, we suggest having a road map of use cases and milestones.

Model

Start out by determining the blueprint of your environment. What are the logical and physical aspects of your data, and what do you want it to look like? Most larger organizations have been modeling their data from a business perspective for decades. Smaller or newer businesses are starting with undefined business definitions, business lineage and PI, and are uncertain of what their critical data should be. On top of that, smaller organizations are starting with an empty business glossary and catalog, while also working to pre-structure the data domains that are key to understanding data in a business context.

Regardless of the size of a business, data maturity starts with data modeling and an understanding of the organization’s business perspective towards data and its data architecture.

Catalog

Cataloging data is really creating an inventory of the data. Like it or not, this inventory of data is the underpinning of your organization. It’s what you are driving your business on. Value use cases here have everything to do with data forensics. The inventory reveals where you have data bloat, redundancy and dark data. The value use cases in this stage usually start with categorizing and profiling the data. If you are in a data migration or integration project, you will be able to see where you have data in isolation (if there’s no tie to the business, do you need this data?) and critical data that needs to be profiled, tagged and categorized.
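To make the forensics idea concrete, here is a minimal sketch of two checks an inventory makes possible: flagging datasets with no tie to the business and grouping datasets that look redundant. The `CatalogEntry` structure, the dataset names and the rule "identical column sets suggest redundancy" are all assumptions for illustration, not the behavior of any particular catalog product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """Hypothetical inventory record for one dataset."""
    name: str
    columns: frozenset          # column names found by profiling
    business_terms: frozenset   # glossary terms linked to the dataset


def isolated(entries):
    """Datasets with no link to any business term (data in isolation)."""
    return [e.name for e in entries if not e.business_terms]


def redundant_groups(entries):
    """Group datasets whose column sets are identical (assumed redundancy signal)."""
    by_columns = defaultdict(list)
    for e in entries:
        by_columns[e.columns].append(e.name)
    return [sorted(names) for names in by_columns.values() if len(names) > 1]


# Illustrative inventory; every name here is made up.
INVENTORY = [
    CatalogEntry("customers", frozenset({"id", "email"}), frozenset({"Customer"})),
    CatalogEntry("customers_copy", frozenset({"id", "email"}), frozenset()),
    CatalogEntry("orders", frozenset({"id", "total"}), frozenset({"Order"})),
]
```

With this sample inventory, `isolated(INVENTORY)` flags `customers_copy`, and `redundant_groups(INVENTORY)` pairs it with `customers`. A real catalog would likely use fuzzier matching than exact column equality.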

Curate

Data value really comes alive once data is curated and contextualized. In essence, this means understanding how the data is used from a business standpoint. Data without context is just a bunch of numbers. It becomes meaningless immediately if you cannot tie that data back to business value. Once we have the business controls around the critical data, we want to “assure” that the data stays relevant and clean. The key to governing this is end user adoption. Can users quickly find what is needed, and can we “govern” data without it being an additional barrier? We want users to be inquisitive and find their answers, promote their insights and collaborate without feeling like it’s a laborious extra step in their day job.

Govern

Governing means providing the business controls around the data. Whether it is a business term, which provides context for the data, or a policy, regulation or business rule, which gives you the guardrails for how to use the data, governance establishes where and how the data is to be used. We have done this for traditional regulatory requirements (Sarbanes-Oxley, BCBS, LIBOR, GDPR and privacy information) and now for responsible AI or the AI Bill of Rights. The right to know when AI shows up, the right to privacy and the right to opt out are very similar to the governance adherence we have been doing all along.

Observe

Data lineage. Whether it’s a POC or an implementation, this is where the “mic drops.” Being able to quickly trace and track data from end to end offers value for data quality, for root cause analysis and for simply being “explainable” under your privacy and regulatory policies. It provides value to traditional use cases as well as modern use cases where you now need to know things like: is this ChatGPT using AI? Did your last AI validation check include an understanding of which datasets were used and whether they had a high degree of data quality?
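Tracing lineage end to end amounts to walking a graph of datasets in both directions. The sketch below is one minimal way to do that with a breadth-first search; the `LINEAGE` edges and dataset names are hypothetical, and a real lineage tool would also carry transformation and rule metadata on each edge.

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the datasets in its list.
LINEAGE = {
    "crm_raw": ["customers_clean"],
    "orders_raw": ["orders_clean"],
    "customers_clean": ["revenue_report"],
    "orders_clean": ["revenue_report", "churn_model"],
}


def downstream(graph, start):
    """Every dataset reachable from `start`, i.e. the scope of an issue."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def upstream(graph, start):
    """Every dataset that feeds `start`, i.e. candidate root causes."""
    reverse = {}
    for parent, children in graph.items():
        for child in children:
            reverse.setdefault(child, []).append(parent)
    return downstream(reverse, start)
```

If `orders_raw` turns out to be faulty, `downstream(LINEAGE, "orders_raw")` lists everything it may have contaminated, while `upstream(LINEAGE, "revenue_report")` lists the sources to inspect when a report looks wrong.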

Score

Scoring gives you a quick indication of the value of the data. This is an algorithm that combines data profiling results, end user rankings and completeness of the data governance curation into a “gold, silver, and bronze” data classification score. You as an organization can choose which facets you wish to use and how much weight to give each facet. The marketplace does the rest. We fully expect clients to want to add more facets and welcome this expansion. We credit Douglas Laney and the book “Infonomics: How to Monetize, Manage, and Measure Information as an Asset for Competitive Advantage” with the first three criteria.
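A weighted combination of facets like this can be sketched in a few lines. The example below is an illustration only: it assumes each facet has already been normalized to a 0.0-1.0 scale, and the facet names, weights and the gold and silver thresholds (0.85 and 0.65) are made-up values, not the marketplace's actual algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FacetScores:
    """Hypothetical facet values, each assumed normalized to 0.0-1.0."""
    profiling: float      # data quality profiling result
    user_ranking: float   # average end user ranking
    curation: float       # completeness of governance curation


def weighted_score(facets, weights):
    """Weighted average of the chosen facets; weights need not sum to 1."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(getattr(facets, name) * w for name, w in weights.items()) / total


def classify(score, gold=0.85, silver=0.65):
    """Map a 0.0-1.0 score to a tier; thresholds are assumed defaults."""
    if score >= gold:
        return "gold"
    if score >= silver:
        return "silver"
    return "bronze"


# Example weighting chosen by the organization (illustrative values).
WEIGHTS = {"profiling": 0.5, "user_ranking": 0.25, "curation": 0.25}
```

For instance, `classify(weighted_score(FacetScores(0.9, 0.8, 1.0), WEIGHTS))` lands in the gold tier under these assumed thresholds. Adding a facet means adding a field to `FacetScores` and an entry in the weights.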

Shop

Finally, are you ready to redistribute data in a shopping cart for end users? Do you need a safe, intelligent front door to your data? Are users able to request and pull data directly from a variety of database environments with internal guardrails? A data marketplace is collective intelligence about your internal datasets, third-party data, reports, AI models, APIs, etc. It’s a one-stop shop for all your data needs. When you have a safe and trusted marketplace, end users will start to form a community around the data and participate in keeping the data from becoming stale and unused. It will help to build responsibility for data and its accuracy across the organization instead of relying on one pocket of IT folks who will “fix everything.”

Why the data maturity conversation continues to be crucial

ChatGPT is one of the levers pushing everyone to be ready for data at scale. Every organization is looking to understand how its different domains are using AI, the insights they are trying to achieve with these models and the data behind them.

DemandSage “ChatGPT Statistics” Users, trends and more 2023 – Daniel Ruby

The ChatGPT frenzy is shining a light on how much we as humans don’t have a handle on. Countries like Italy, and perhaps soon Germany, have banned ChatGPT. In America, AI leaders have assembled and asked Congress to put a pause on AI. GDPR for AI requires that end users know when something was written by AI. As data leaders, it is up to us to start defining policies and working with organizations to decide what is permissible.

Providing structure and creating data products that are fully governed is a great way to balance risk with disruption. Because this is the problem, right? How do companies balance the risk of bias and privacy while deriving useful insights?

Understanding where and how data is being provisioned, and to what degree of quality, is essential to ensuring the integrity of any data product. Offering up data as a product in a marketplace environment provides the trust and assurance that are essential to insightful, trustworthy results. Great data leaders recognize the need for change because they know the pain of the current data environment and can see the promise of a future with a strong self-service offering to promote throughout the organization.