Every boardroom in the world is having a similar conversation: “What’s our AI strategy?”

But maybe they should step back and ask a different question: “What’s our AI failure strategy?”

Because while everyone is racing to implement AI, the AI project failure rate stands at roughly 80%, twice that of traditional IT projects, according to RAND Corporation’s 2024 research. Even more alarming? S&P Global’s 2025 survey found that 42% of companies abandoned most of their AI initiatives this year, up from 17% in 2024.

The average organization scraps half of its AI proofs of concept before they reach production. Gartner reports that only 48% of AI projects make it past pilot, and predicts that at least 30% of generative AI projects will be abandoned after proof of concept by the end of 2025. And, of course, there’s the widely discussed MIT study reporting that 95% of GenAI pilots are failing.

The economic impact? With global AI spending projected to reach $630 billion by 2028, according to IDC, the AI project failure rate could represent hundreds of billions of dollars in wasted investment, lost productivity and missed opportunities.
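To see where a figure like "hundreds of billions" comes from, here is a rough back-of-envelope sketch. It makes a crude assumption that is not in the cited research: that the share of spending at risk equals the share of projects that fail. The 52% figure is simply the complement of Gartner's 48% that make it past pilot, and the function name `spend_at_risk` is purely illustrative.

```python
# Back-of-envelope estimate only. Assumes (crudely) that the share of
# spending at risk equals the share of projects that fail.

PROJECTED_SPEND_BUSD = 630  # IDC projection for 2028, in USD billions

# Failure rates quoted in the article; 0.52 is the complement of
# Gartner's "48% make it past pilot".
FAILURE_RATES = {
    "RAND (80% of projects fail)": 0.80,
    "Gartner (52% never get past pilot)": 0.52,
}


def spend_at_risk(total_busd: float, failure_rate: float) -> float:
    """Return the spending (USD billions) tied to projects that fail."""
    return total_busd * failure_rate


for label, rate in FAILURE_RATES.items():
    at_risk = spend_at_risk(PROJECTED_SPEND_BUSD, rate)
    print(f"{label}: ~${at_risk:.0f}B at risk")
```

Under that assumption, the two rates put roughly $504 billion and $328 billion at risk. Real losses depend on how spending is distributed across projects and how much value failed projects still return, so treat these as order-of-magnitude numbers.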

This isn’t just a technology problem. It’s an organizational crisis hiding in plain sight.

Beyond “bad data”: Three hidden culprits behind the AI project failure rate

The 80% AI project failure rate can’t be blamed solely on poor data quality. While bad data is a known issue, deeper organizational and cultural challenges often sabotage AI initiatives. According to RAND’s research, interviews with data scientists and engineers highlight that organizational and cultural issues are among the leading causes of AI project failure.

There are three hidden culprits behind AI failures: a trust deficit crisis, an organizational readiness gap, and a governance paradox. Each contributes to the staggering AI project failure rate, revealing why traditional approaches fall short and what must change to drive AI success.

1. The trust deficit crisis

Your data scientists don’t trust your data. Your business users don’t trust your AI outputs. Your board doesn’t trust your AI governance.

It’s a vicious cycle:

- Data scientists spend most of their time cleaning and validating data instead of building new models

- Business users revert to gut decisions when AI recommendations seem questionable

- Executives hesitate to scale pilots when they can’t explain how the AI works (or, even worse, whether it can be trusted)

The Reality Check: Quest’s 2024 State of Data Intelligence Report found that 37% of organizations cited data quality as their top obstacle to strategic data use, followed closely by issues with information in silos (24%) and trust in data (19%).

2. The organizational readiness gap

Many companies are structurally designed to resist AI success. I know, that sounds strange to say about something so crucial to future success and competitive advantage, but they have:

- Siloed data ownership that makes integrated AI impossible

- Waterfall governance that can’t keep pace with agile AI development

- Success metrics that reward individual department wins over enterprise value

- Cultural antibodies that attack any threat to the status quo

3. The governance paradox

Traditional governance would suggest: “Lock everything down, document everything, approve everything.”

AI innovation is on the other side saying: “Move fast, experiment constantly, fail quickly.”

This fundamental conflict means that:

- The most governed organizations often have the least AI-ready data

- Compliance-focused frameworks create friction that kills innovation

- Risk management becomes risk paralysis

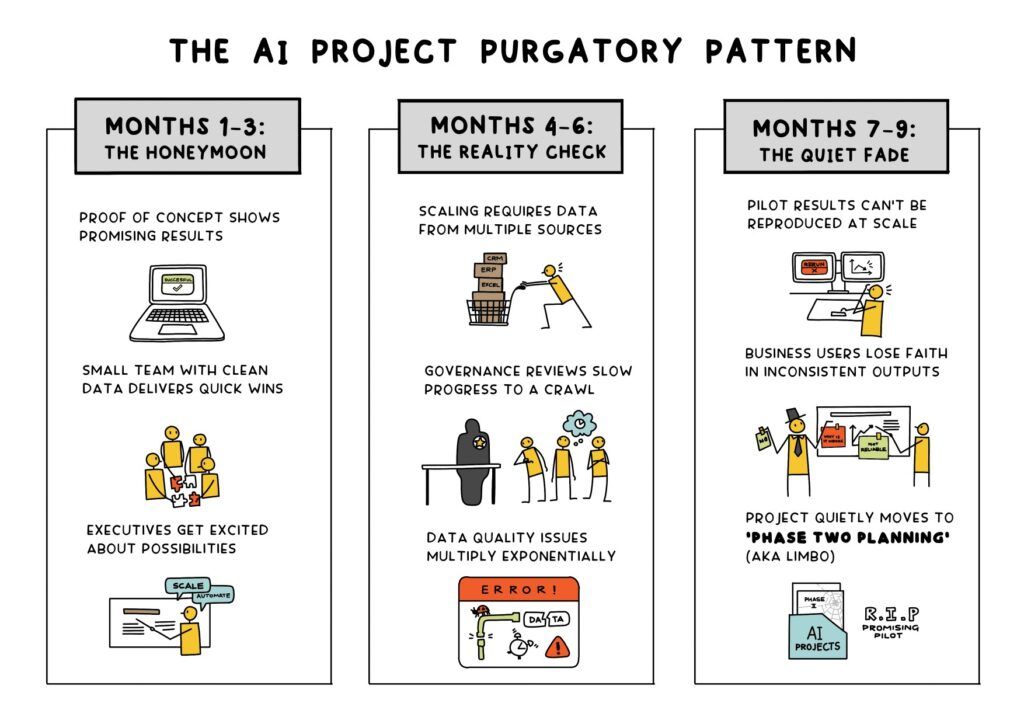

The AI project purgatory pattern

Here’s how the failure pattern typically unfolds.

This pattern is so common that Gartner found it takes an average of eight months to go from AI prototype to production, and that’s only for the projects that actually make it to production. Meanwhile, executive boards are pushing for speed-to-market and quick returns.

Why traditional approaches are failing

Conventional wisdom would say: First, fix your data. Then, implement governance. Finally, build AI.

This sequential approach is prone to failure because:

- Perfect data is a myth: By the time you’ve fixed your data, the business has moved on.

- Governance as a gatekeeper: Traditional governance adds friction without adding value.

- AI as an endpoint: Treating AI as a destination rather than a journey all but ensures you’ll never arrive.

McKinsey’s 2025 AI survey confirms that organizations reporting “significant” financial returns are twice as likely to have redesigned end-to-end workflows before selecting modeling techniques. It’s not about technology… it’s about the approach.

The path forward: Thinking differently

The organizations that are going to succeed with AI the fastest aren’t the ones with a complete repository of perfect data. They will:

- Build trust incrementally through transparency and measurement, not perfection

- Embed governance into workflow rather than layering it on top

- Create data products that can be reused and scaled, not data projects

- Embrace trusted AI-ready data with clear understanding of its limitations
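As a minimal sketch of what “embedding governance into workflow” and “building trust through measurement” could look like in practice, the example below attaches quality checks directly to a pipeline step and reports a pass rate for each check on every run. Everything here is hypothetical and for illustration only: the `GovernedStep` class, the check names, and the sample rows are assumptions, not a reference to any particular product or framework.

```python
from dataclasses import dataclass, field
from typing import Callable

Row = dict
Check = Callable[[Row], bool]


@dataclass
class GovernedStep:
    """A pipeline step that carries its own quality checks (hypothetical design)."""

    name: str
    transform: Callable[[Row], Row]
    checks: dict[str, Check] = field(default_factory=dict)

    def run(self, rows: list[Row]) -> tuple[list[Row], dict[str, float]]:
        """Transform the rows and return them with a per-check pass rate."""
        out = [self.transform(r) for r in rows]
        # The pass rate per check is the measurable trust signal: it is
        # produced on every run rather than in a separate approval stage.
        report = {
            check_name: (sum(1 for r in out if check(r)) / len(out) if out else 0.0)
            for check_name, check in self.checks.items()
        }
        return out, report


# Illustrative usage with made-up order data.
step = GovernedStep(
    name="normalize_orders",
    transform=lambda r: {**r, "country": (r.get("country") or "").upper()},
    checks={
        "has_customer_id": lambda r: r.get("customer_id") is not None,
        "amount_non_negative": lambda r: r.get("amount", 0) >= 0,
    },
)

rows = [
    {"customer_id": 1, "amount": 20.0, "country": "us"},
    {"customer_id": None, "amount": 5.0, "country": "de"},
]

clean, report = step.run(rows)
print(report)
```

The point of the design is that the data does not have to be perfect to be used. Each run publishes how far it falls short, so downstream users and executives can see the known limitations instead of guessing at them.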

The bottom line

An 80% AI project failure rate doesn’t need to be your fate. But avoiding it requires more than better technology or cleaner data. It requires fundamentally rethinking how your organization approaches data trust, governance and value creation.

The question isn’t whether or not you will invest in AI. The question is whether or not you’ll invest in AI success… or just add to the growing pile of expensive AI failures.