Copilot for Microsoft 365 is a productivity tool that combines the power of AI with your organization’s data to help people create, edit, and understand content, and to catch up on work. Understandably, some companies are concerned about the idea of an omniscient AI assistant; I get a lot of questions about how to use Copilot effectively and securely.

Best practices for using Copilot for Microsoft 365 really come down to being proactive about your data and how you interact with your AI tools. In this post, I’ll share 7 best practices for working with Copilot (specifically, Copilot for Microsoft 365).

1. Review site permissions and manage oversharing.

Microsoft Copilot only accesses data that an individual user has existing access to, but it is possible that users have access to data that they shouldn’t be able to see. Copilot can surface content that may have been difficult for a user to find on their own, so SharePoint and OneDrive permissions management is the key to securing Copilot for Microsoft 365.

It’s important to review site permissions for SharePoint and OneDrive, paying particular attention to Team Sites connected to public groups, which include permissions for a group called “Everyone except external users”; this is a common source of oversharing.
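
As a rough illustration of that review, here is a minimal Python sketch. It assumes you have already exported site permissions into a list of records; the field names `site_url` and `principal`, the sample URLs, and the function name are all hypothetical, so adapt them to whatever your own permissions report contains.

```python
# Display name of the broad group called out in this post.
BROAD_PRINCIPAL = "Everyone except external users"


def find_overshared_sites(permission_rows):
    """Return the sorted site URLs where the broad group holds any permission.

    `permission_rows` is an iterable of dicts with the hypothetical keys
    `site_url` and `principal` (assumed export format, not a real schema).
    """
    flagged = set()
    for row in permission_rows:
        # Compare case-insensitively and ignore stray whitespace in the export.
        if row["principal"].strip().lower() == BROAD_PRINCIPAL.lower():
            flagged.add(row["site_url"])
    return sorted(flagged)


# Made-up sample data for illustration only.
rows = [
    {"site_url": "https://contoso.example/sites/hr", "principal": "HR Owners"},
    {"site_url": "https://contoso.example/sites/all-hands",
     "principal": "Everyone except external users"},
]
print(find_overshared_sites(rows))
```

The flagged list is only a starting point: each site still needs a human decision about whether broad access is intended.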

Don’t panic about “People in your organization” links; they don’t automatically grant access to everyone or to Copilot. Permissions are only granted once the link is redeemed.

Data Loss Prevention (DLP) can audit and prevent content from being shared or stored inappropriately – so, for instance, a user cannot upload a sensitive file to a public site.

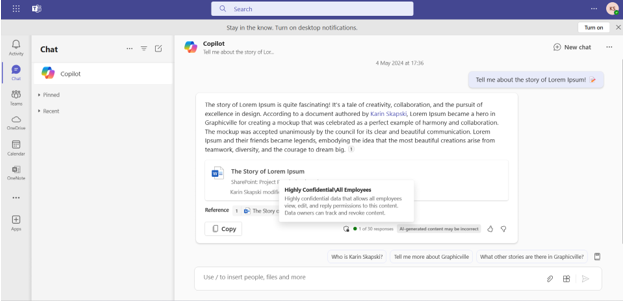

Ideally, implement sensitivity labels to label and manage access to content wherever it lives – including when it is used by Copilot for Microsoft 365. If a user has access to a confidential library that isn’t labeled accordingly, that library might be referenced by Copilot to ground its response to a user prompt, and the user may inadvertently use sensitive information in an unauthorized manner. On the other hand, when labeled content is used by Copilot to ground a response, Copilot shows the label in its responses. If Copilot generates content that references a labeled file, that same label is applied to the generated content.

A response from Copilot for Microsoft 365 that references a labeled file.

Be careful when encrypting content with sensitivity labels: the EXTRACT usage right is required for Copilot to return content. If the EXTRACT usage right is removed, Copilot will not be able to use the labeled data to ground its responses.
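
To make the rule concrete, here is a small Python sketch of the check. It assumes you have the usage rights granted by a label written out as a list of strings; that representation, the function name, and the sample lists are illustrative assumptions, not the format of any Microsoft API.

```python
def copilot_can_ground_on(usage_rights):
    """Return True if EXTRACT is among the usage rights granted to the user.

    `usage_rights` is an iterable of right names as plain strings
    (an assumed representation for illustration).
    """
    return "EXTRACT" in {right.strip().upper() for right in usage_rights}


# Illustrative label configurations, not real tenant data.
print(copilot_can_ground_on(["VIEW", "EXTRACT"]))  # EXTRACT present
print(copilot_can_ground_on(["VIEW"]))             # EXTRACT removed
```

Running a check like this across your label definitions before rollout can highlight labels that would silently exclude content from Copilot’s responses.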

2. Clean up outdated and irrelevant data.

Copilot can only be as effective as its grounding data. If the data that a user can access (ergo, the data that Copilot can access) through Microsoft Graph is outdated or irrelevant, Copilot’s responses are more likely to be outdated or irrelevant.

To ensure that Copilot has accurate data to work with, audit inactive sites and teams and restrict access, archive, or delete them.
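
A minimal sketch of that audit in Python, assuming you have a usage report reduced to records with a URL and a last-activity date. The keys `url` and `last_activity`, the 365-day threshold, and the sample sites are assumptions chosen for illustration.

```python
from datetime import date, timedelta


def find_stale_sites(sites, today, max_idle_days=365):
    """Return URLs of sites with no activity in the last `max_idle_days` days.

    `sites` is an iterable of dicts with hypothetical keys `url` (str)
    and `last_activity` (datetime.date).
    """
    cutoff = today - timedelta(days=max_idle_days)
    return [site["url"] for site in sites if site["last_activity"] < cutoff]


# Made-up sample data for illustration only.
sites = [
    {"url": "https://contoso.example/sites/holidays-2022",
     "last_activity": date(2022, 1, 15)},
    {"url": "https://contoso.example/sites/current-project",
     "last_activity": date(2024, 5, 1)},
]
print(find_stale_sites(sites, today=date(2024, 6, 1)))
```

The output is a candidate list for review; whether to restrict, archive, or delete each site remains a judgment call for its owners.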

It’s also important to make sure that you don’t have multiple versions of the same file. Identify where multiple versions of files exist and delete or archive previous versions (e.g., archive a file titled “List of Holidays 2022”). Ideally, use shortcuts to SharePoint sites and OneDrive folders that have been shared with you instead of saving copies to your OneDrive, because saved copies do not get updated.
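
One way to start looking for saved copies is to hash files in a locally synced folder. The sketch below uses only the Python standard library and finds byte-identical copies; it will not detect edited versions of a file, and the function name and folder layout are assumptions for illustration.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def find_identical_files(root):
    """Group files under `root` by SHA-256 digest.

    Returns a list of groups (lists of Paths), one per digest shared by
    more than one file. Reads each file fully into memory, so it suits
    modest folders rather than very large libraries.
    """
    by_digest = defaultdict(list)
    for path in sorted(Path(root).rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            by_digest[digest].append(path)
    return [paths for paths in by_digest.values() if len(paths) > 1]
```

Calling `find_identical_files` on the path of a synced OneDrive folder would return groups of duplicate files to review before deleting or archiving anything.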

Consider implementing retention labels, retention policies, and group expiration settings to automate the process of reviewing, archiving, and/or deleting stale data.

3. Enable transcription for Teams meetings and calls.

To use Copilot for Microsoft 365 with meetings and calls, transcription needs to be enabled. Sure, it’s possible to use Copilot in meetings without transcription enabled, but only during the meeting. If you can’t remember the details of what someone said, if you want to try a new prompt idea with a previous meeting, or if the organizer ends the meeting before Copilot finishes generating meeting notes, you’re out of luck.

For most organizations, I recommend enabling transcription both for meetings and for calls, and disabling the ability to use Copilot in meetings without a transcript.

Why disable the ability to use Copilot without a transcript altogether? In Teams meeting policies, the Copilot setting (under “Recording & transcription”) has two options: “On”, and “On only with retained transcript”. If the policy value for Copilot is “On”, the default value for Copilot in meeting options is “Only during the meeting” – meeting organizers need to remember each time they schedule a meeting to change the setting to “During and after the meeting”.

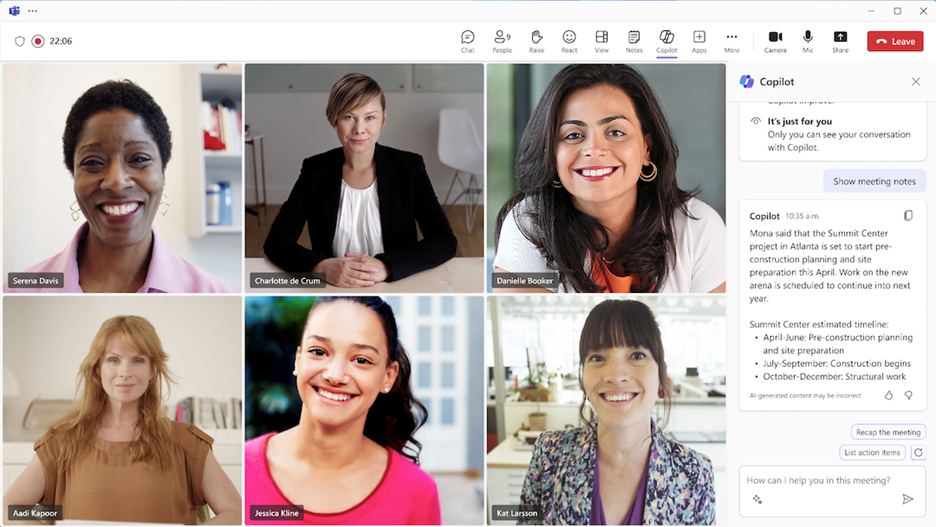

Copilot for Microsoft 365 in a Teams meeting. Image credit: Microsoft.

4. Disable extensibility until further investigation to avoid organization data leaving the Microsoft 365 service boundary.

While “Copilot for Microsoft 365 is upholding data residency commitments as outlined in the Microsoft Product Terms and Data Protection Addendum”, organization data can leave the Microsoft 365 service boundary in a couple of scenarios:

- When you use plugins for Copilot for Microsoft 365.

- When you use the web content plugin.

For organizations with concerns about data residency, I recommend disabling Copilot extensibility and/or blocking apps with plugins for Copilot until investigating further.

The web content plugin for Copilot is slightly different from other plugins for Copilot. It is a first-party solution from Microsoft that leverages Bing’s Search API. With the web content plugin enabled, Copilot for Microsoft 365 can determine whether web content may improve its response and, if so, sends a newly generated search query to the Bing Search API; that query is disassociated from the user ID and tenant ID. It does not send the user’s prompt verbatim to the Bing Search API. Read more about how the process works in Microsoft’s documentation.

I recommend against blocking access to web content in Copilot for Microsoft 365, as it will negatively impact the quality of responses from Copilot in chat.

5. Audit and prevent inappropriate interactions with Copilot.

Many organizations are concerned that Copilot might be used with malicious intent. Thankfully, interactions with Copilot can be audited and prevented in multiple ways, depending on your use case.

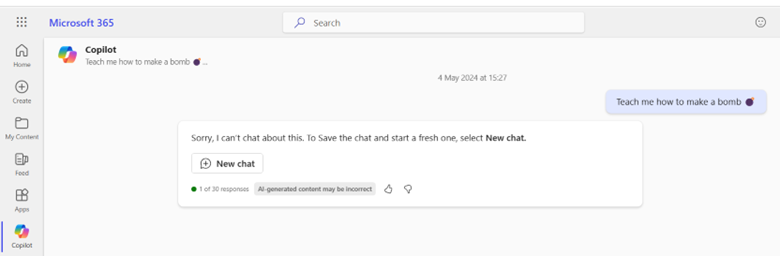

The security, compliance, privacy, and responsible AI controls that are built into Copilot for Microsoft 365 are a first line of defense that should prevent most nefarious attempts to use Copilot.

How Copilot for Microsoft 365 handles inappropriate prompts.

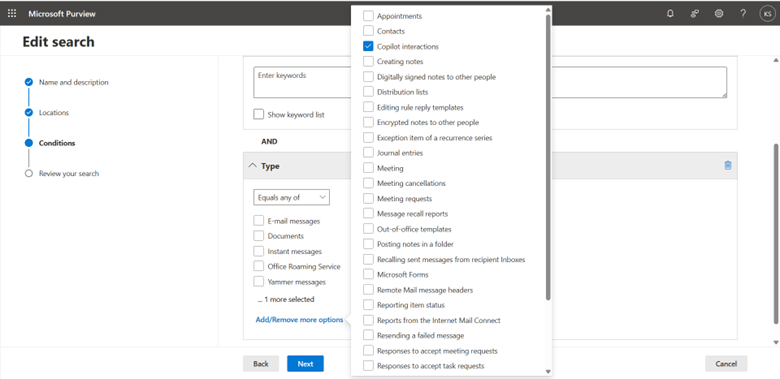

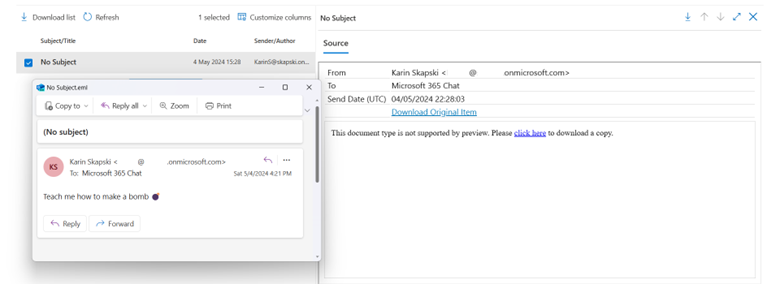

The tenant audit log does not include user prompts to Copilot or Copilot’s responses, but they can be retrieved with eDiscovery or content search by selecting the mailbox as the source location, adding a condition for “Type”, then choosing “Copilot interactions”. Criteria like keywords and users refine the search results.

Add “Copilot interactions” to the conditions for a content search query to retrieve user prompts and Copilot’s responses.

Example result of a content search query. It’s not what it looks like: I meant to ask Copilot how to make a bath bomb…

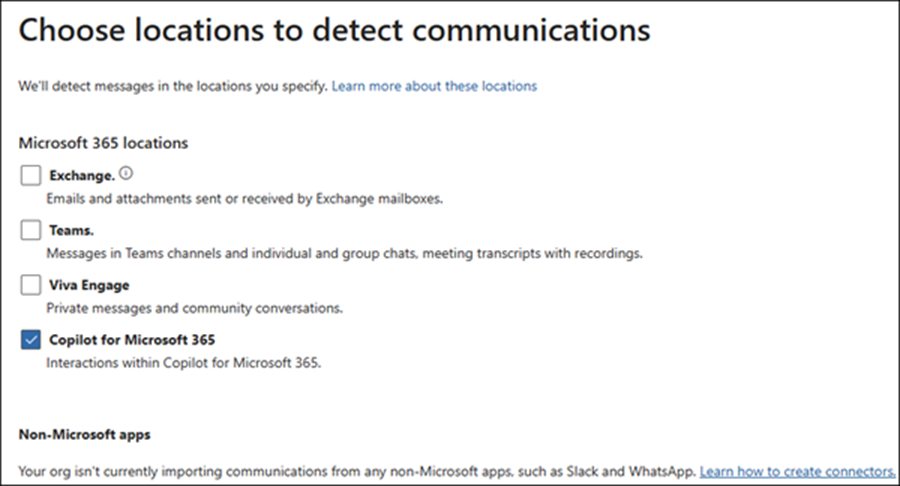

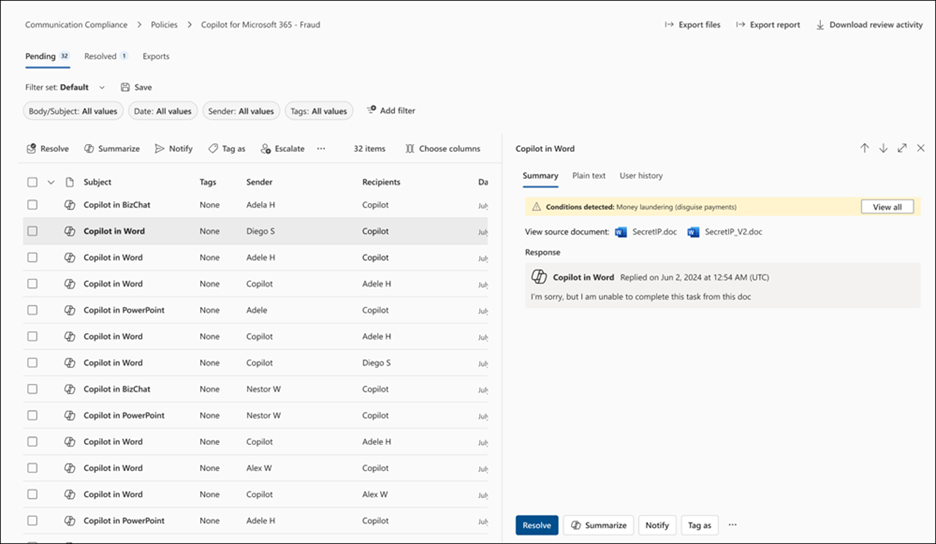

Communication Compliance policies can also be used to surface interactions with Copilot, and they offer a much more robust set of features than content search – like pseudonymization of sender/recipient aliases and the ability to leverage sensitive information types, trainable classifiers, and Azure AI content safety classifiers.

Copilot for Microsoft 365 is available as a location for communication compliance policies. Image credit: Microsoft

Communication compliance policy matches for Copilot. Image credit: Microsoft

6. Write effective prompts for effective results.

Effective prompting ensures that the AI understands the context and the user’s intent, leading to more accurate, relevant, and useful responses. Essentially, the quality of the input directly influences the quality of the output.

The process of giving AI specific instructions to make sure it does a task the way you want is often referred to as “prompt engineering”, and the best Copilot prompts include a combination of goals, context, expectations, and source data. I like to refer to this one-pager on the art and science of prompting and this “Learn about Copilot prompts” support page, both from Microsoft, which are great resources for Copilot users of all skill levels.
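
To show how those four ingredients fit together, here is a small Python sketch that assembles a prompt from a goal, context, expectations, and source. The function name and the example wording are my own illustration, not an official template; Copilot accepts free-form text, so this is simply a way to check that no ingredient has been forgotten.

```python
def build_prompt(goal, context="", expectations="", source=""):
    """Join the four prompt ingredients into one prompt string.

    Empty ingredients are skipped, so a goal-only prompt still works.
    """
    parts = [goal, context, expectations, source]
    return " ".join(part.strip() for part in parts if part.strip())


prompt = build_prompt(
    goal="Draft a summary of last week's project meeting.",
    context="It is for executives who missed the meeting.",
    expectations="Keep it under 200 words and use bullet points.",
    source="Use the meeting transcript and the files shared in the chat.",
)
print(prompt)
```

Writing the parts out separately makes it easier to see which one to adjust when a response misses the mark.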

If you don’t get the result you want from Copilot right away, follow up with another prompt. Think of it like a conversation: the goal is effective communication, and the iterative process of asking and answering helps achieve that – whether it’s between humans, or between a human and Copilot.

7. Validate responses from Copilot.

While this is one of the fundamental tenets of working with AI, it bears repeating: always validate the information you get from Copilot, just like you wouldn’t copy and paste a snippet of code without understanding how it works (…right?). One way to validate Copilot’s responses is to click on its citations and read through them. You can also get more information by following up with prompts like “Are you sure?”, “I don’t think that’s right”, or “Please validate further”. Remember, conversational AI isn’t always a one-shot deal; sometimes it takes some back-and-forth to get the responses you need.

Conclusion

As we continue to leverage AI in our daily tasks, it is crucial to understand not just how our AI tools work, but how we can best work with them. A bit of housekeeping, flexibility, and curiosity can go a long way in ensuring the tools are most effective for our particular needs, and that is no different for Copilot for Microsoft 365.